User talk:Dragons flight/Log analysis

On 11 October 2007, User:Dragons flight/Log analysis was linked from Slashdot, a high-traffic website. (Traffic) All prior and subsequent edits to the article are noted in its revision history.

This page has been mentioned by a media organization:

Initial comments

The claim that the upload rate is decreasing is misleading. Rather, more traffic is shifting to commons, which is seeing an increase in uploads that probably more than offsets the decline in enwp local uploads. --Gmaxwell 00:10, 10 October 2007 (UTC)

- Changes on commons may or may not be associated to changes on EN, but the fact that local uploads are decreasing is interesting evidence of... [insert favorite explanation here]. Maybe that traffic shifted, or maybe it just stopped or any of several possible reasons. Dragons flight 00:41, 10 October 2007 (UTC)

- It's pretty easy to demonstrate that a good portion of the change is shifting by instead monitoring the number of images in use on enwp. --Gmaxwell 01:33, 10 October 2007 (UTC)

Where did the article history data come from? How was the subset selected? Some of the results look a little odd: For example, between 2007-09-19 and 2007-09-25 33.6% (294113/874644) of NS0 edits are by anons but your graph looks closer to 45%, though it's hard to tell from the graphs. --Gmaxwell 00:29, 10 October 2007 (UTC)

- By choosing randomly from the page table for namespace=0 and brute force downloading the edit history list as rendered by action=history. The graph shows ~45 out of a total of ~130, which is ~1/3. Nothing wrong there. Dragons flight 00:41, 10 October 2007 (UTC)

- Choosing randomly how? Like "I imported the 2007xxyy dump into mysql and did select page_title from page where page_namespace=0 order by RAND() limit xxxyyy;" or "I just picked things out of recent changes as they went by". Thanks for the clarification on the graphs. :) --Gmaxwell 01:33, 10 October 2007 (UTC)

- Functionally the former. Dragons flight 01:51, 10 October 2007 (UTC)

- That's still a little vague. Could you please be more detailed? --Gmaxwell 14:35, 10 October 2007 (UTC)

- I used the September dump, but rather than ORDER BY RAND() I used python's random number generator to pick from a list of page ids with namespace=0. I also set it up to prevent duplicate selections. So, it would have the same effect as your query. Dragons flight 16:18, 10 October 2007 (UTC)

- Oh, I also excluded redirects. Dragons flight 16:30, 10 October 2007 (UTC)

- It's well known that the popularity of pages on enwiki follows a power law. By sampling randomly you're hitting a lot of pages from the tail of the popularity distribution. I'm wondering whether you've tried to correct for this when you've scaled the features that you studied, or if you've scaled the features simply by the inverse of the proportion of the pages you've sampled. Thanks for the work -- I really hope that the enwiki dump becomes available soon, especially with results such as these to goad on future studies. Bestchai 21:25, 11 October 2007 (UTC)

- Drat! You beat me to it. I was waiting to say something until I had better data. ;) See below. --Gmaxwell 12:40, 12 October 2007 (UTC)
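
The page selection described above (pick page ids with namespace=0 using Python's random number generator, prevent duplicate selections, exclude redirects) can be sketched roughly as follows. This is only an illustration, not the script that was actually used: the function name `sample_page_ids` and the `(page_id, namespace, is_redirect)` tuple layout are assumptions standing in for rows of the dump's page table.

```python
import random

def sample_page_ids(pages, k, seed=None):
    """Pick k distinct non-redirect main-namespace page ids uniformly at random.

    `pages` is an iterable of (page_id, namespace, is_redirect) tuples, a
    hypothetical stand-in for rows read from the dump's page table.
    """
    eligible = [pid for pid, ns, is_redirect in pages
                if ns == 0 and not is_redirect]
    rng = random.Random(seed)
    # random.sample draws without replacement, so no page is selected twice.
    return rng.sample(eligible, k)

# Toy data: ids 3 and 7 are redirects, id 9 lives in another namespace.
toy_pages = [(i, 0, i in (3, 7)) for i in range(1, 9)] + [(9, 1, False), (10, 0, False)]
picked = sample_page_ids(toy_pages, 4, seed=1)
print(picked)
```

Every eligible page has the same chance of selection here, which is also what `ORDER BY RAND()` would give; the sample is uniform over pages, not over edits.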

Not News

That Wikipedia growth is no longer exponential is not news (one example). You're showing us graphs of the article creation rate and edit rate, which are the first derivatives of the article count and edit count, which are the true size metrics. They are flat as of recently and somewhat less than their peak. What should we expect them to be now that we are not exponential? Pretty much flat. The period of exponential growth for German Wikipedia ended sometime earlier than Enwp, and they are still doing well. I think you're making too big of a deal out of this. In any case, the update is interesting. Thanks! --Gmaxwell 01:33, 10 October 2007 (UTC)

Some thoughts

First, thanks for this. Are there equivalent stats being produced elsewhere, or not? Greg, where is the report on de plateauing - that sounds interesting? When discussing scaling problems and stuff at RfA, I predicted that plateauing would solve a lot of problems at en. Hopefully if the plateauing remains, consolidation can take place. Also, are there stats available about Commons versus images on en and other language encyclopedias?

- Bots have a low error rate. I think the writing is on the wall...

- There should be an article creation spike due to User:Polbot, but I'm not seeing it. Maybe it is just less visible in the greater noise compared to the time of Rambot and its ilk?

Again, thanks for this. Maybe I can trot out my favorite question - how many deleted (dead) articles are there compared to living ones? Carcharoth 02:18, 10 October 2007 (UTC)

- Since the existence of the deletion log, there have been 1,191,190 article deletions. Some fraction of these are pages that have been repeatedly deleted and others are pages that were deleted but later restored. However, to first approximation there is one deleted article space page for every two real articles. Dragons flight 02:28, 10 October 2007 (UTC)

- Oh. That's a big surprise. Given the huge amounts of deletion that seems to go on, I would have thought that the number of deleted pages would be far larger. It looks like while people are arguing about deletion, lots of stubs are being created incessantly in the background. This is almost enough to turn me into a deletionist! :-) What date does the deletion log go back to? Carcharoth 03:32, 10 October 2007 (UTC)

- December 2004. Dragons flight 04:27, 10 October 2007 (UTC)

Mistake regarding reverts

You say "the majority of reverts are issued by administrators", but I don't think your statistics support that. An average admin makes 3 times more reverts than an average non-admin, but since admins make only a small minority of total edits, the number of reverts is still a minority. --Tango 11:22, 10 October 2007 (UTC)

- Most obvious metadata driven methods of measuring reverts create a bias towards administrator performed reverts (because the rollback button always produces detectable reverts). --Gmaxwell 14:35, 10 October 2007 (UTC)

- I tested my logic against a few articles with long histories, and it appeared to have a high accuracy rate of identifying non-admin revert comments. Yes, there is a potential for bias there, but I don't think it is anywhere near as large as the apparent difference between populations. Dragons flight 16:18, 10 October 2007 (UTC)

- You're right. There are more reverts, in total, by non-admins, while admins do it more often. I've corrected this in the text. Dragons flight 16:18, 10 October 2007 (UTC)

- Much better. :) --Tango 16:57, 10 October 2007 (UTC)

How does your revert detection logic compare to User:ais523/revertsum? I was collecting evidence on revert edit summaries to come up with my own heuristic; is there anything I missed (or anything you missed)? --ais523 15:07, 11 October 2007 (UTC)

Reverts...

So on an average day there are 13000 reverted edits, of which around 8500 are made by IPs. Not to mention that at least most of the reverted edits made by admins are edit warring, and I guess for normal users it's not that much different. --TheK 15:59, 10 October 2007 (UTC)

- Yes, roughly 75% of all edits that are reverted come from IPs. Though it is noteworthy that IPs supply much more content that is not reverted. Dragons flight 14:45, 11 October 2007 (UTC)

Fabulous!

Wow, this is great stuff. I can't say I had a burning need to know any of this, but the information is fascinating and raises all sorts of interesting questions. Many thanks for the hard work of collecting, analyzing, and posting it. William Pietri 23:06, 10 October 2007 (UTC)

- Fascinating. I look forward to seeing the mainstream media address this, assuming they can understand your graphs. --Milowent 14:22, 12 October 2007 (UTC)

Comparison of Wikipedia namespace editing vs main namespace editing

Any way of getting that sort of statistic? - Ta bu shi da yu 14:21, 11 October 2007 (UTC)

- I don't currently have info about non-article space editing. Dragons flight 14:44, 11 October 2007 (UTC)

Line to clarify

"Administrative action records are partly confused by the action of secretive adminbots that have been run by Curps, Betacommand, Misza13 and others." - eh? isn't that strictly forbidden? What evidence is there of this? - Ta bu shi da yu 14:22, 11 October 2007 (UTC)

- Curps and Betacommand aren't currently using adminbots, though I think Curps' bot still holds the all-time blocking record. With regards to Misza13 see: Wikipedia_talk:Non-free_content/Archive_28#Why_bother_uploading_if_a_bot_is_going_to_delete_it.3F. Nor are those three an exhaustive list. Dragons flight 14:30, 11 October 2007 (UTC)

Alexa

Alexa shows a small drop over the past week, but not larger than several other dips over the past few years. 1of3 14:23, 11 October 2007 (UTC)

Missing number of articles over time

A graph of just the plain number of articles over time would add extra meaning to the number of edits over time. --83.83.56.111 17:11, 11 October 2007 (UTC)

More data

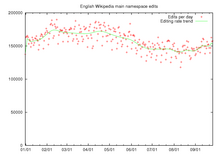

I've done an independent analysis of edit frequency using a different method. I estimated the total number of edits to Wikipedia ever (including reverts, deleted edits, and edits not to articles) by taking the oldid of the last edit to the sandbox before each day UTC (so the edit listed as the number was actually made before), and the difference of that number to the number on the next day, which gives an approximation to the total number of edits on that day (and has the advantage of being cheap to obtain from the servers). The data goes forward to the present and back to 8 July 2005. The raw data is available at User:ais523/Stats, so that people can do better analysis of it than my quick attempts in Excel; the graph seems to show a drop-off in edit rate in about April 2007, supporting Dragons flight's findings. --ais523 11:52, 12 October 2007 (UTC)

- But that's not his claim, his claim is that editing rate is actively declining which is a position not supported by your graphs. The fact that exponential growth stopped is not news, it was previously known, see above, but your graph does confirm that. Your approach is reasonable but can't be limited to a single namespace. --Gmaxwell 12:34, 12 October 2007 (UTC)

- It's a bit of a mixed result, supporting the 'declining' argument in some respects but not in others. From my graph, the rate (the magenta line, or smoothed as the black line) seems to have been declining from about April to August, and is now picking up again, but I don't know if that's a significant result or a fluctuation. Yes, I know my method's limited to the all-namespace all-edit count, but it's interesting to find some data that can't be affected by sample bias; I see you have some more data, probably more useful than mine, in the section below, and I may look at those as well. --ais523 12:42, 12 October 2007 (UTC)

- I think it would be most accurate to say that Enwikipedia discontinued its exponential edit count growth at the beginning of the year and now grows linearly with a rate somewhat less than its all time peak. The decrease from the all time peak is statistically significant, but it's still within the regular weekly edit rate volatility.

- The end of exponential growth means that English now grows in a manner more comparable to other large Wikipedias. It was an inevitable change and was widely discussed elsewhere in the March/April time frame.

- In any case, I really think the way you measured was quite smart. Had I thought of it I still would have done what I did, but only because of the namespace filtering issue. I also like that you kept the daily numbers, since it's informative to see how large the daily volatility is. :) Also the cumulative edits line is great, since it corrects the most frequent mistaken impression that comes from looking only at a derivative graph. --Gmaxwell 20:29, 12 October 2007 (UTC)

Because I was interested in seeing where the pattern was going, I've redone the graph containing analysis up to the present. Unfortunately, there was an incident involving the Sandbox history, so the results from October 2007 onwards are now based on WP:AIV, although hopefully this won't reduce the accuracy too much. It looks to me as though what has happened is indeed the end of exponential growth and the beginning of a linear growth phase instead; after the decline in April 2007, the number of edits per day seems to have been approximately constant. --ais523 11:02, 19 February 2008 (UTC)

- By the way, the raw data's at User:ais523/Stats, as before. --ais523 11:03, 19 February 2008 (UTC)
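
The oldid-differencing estimate described at the top of this section can be sketched as below. It assumes revision ids are handed out sequentially across the whole site, so the gap between the newest oldid seen before one day and the newest seen before the next approximates that day's total edit count. The function name `edits_per_day` and the sample values are made up for illustration; the real figures are at User:ais523/Stats.

```python
def edits_per_day(daily_oldids):
    """Estimate total edits per day from the newest oldid seen before each day.

    `daily_oldids` is a chronological list of (date_string, oldid) pairs,
    one per consecutive UTC day.
    """
    estimates = []
    for (day, oldid), (_, next_oldid) in zip(daily_oldids, daily_oldids[1:]):
        # The difference counts every revision id issued during `day`.
        estimates.append((day, next_oldid - oldid))
    return estimates

# Made-up oldids, purely illustrative.
sample = [("2007-10-01", 1000), ("2007-10-02", 1250), ("2007-10-03", 1430)]
result = edits_per_day(sample)
print(result)
```

Because any frequently edited page will do as the probe, switching from the Sandbox to WP:AIV changes only how close to midnight the observed oldid is, not the method itself.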

An actual count of main namespace edits

The sampling approach Dragons flight used was flawed: It would have worked if the distribution of edits to articles were a symmetrical two sided distribution, but the distribution of edits to articles is roughly power-law.

I took the time to count the actual edits to NS0 in 2007. Anyone with a toolserver account could have done this.

Results are:

- The editing rate is lower than the all time peak: As we've known for a while enwp edit count is not growing exponentially anymore. Summer time has always had slower growth for us. German Wikipedia lost exponential growth about a year ago.

- Dragons flight's approach substantially underestimates the number of NS0 edits per day.

- The short term behavior is that the editing rate is increasing. (As some suggested it might be based on the alexa graphs)

The data underlying the graph is here. I'll post the full data with a listing of every edit, when it was made, and who made it later along with some better graphs, and an overlay of the page view rate.

Enjoy! --Gmaxwell 12:34, 12 October 2007 (UTC)

- Interesting. Thanks for doing this. Could you take this back to January 05 or earlier? That's the interesting part to me. Also, is NS0 the same thing as the main space? Thanks, William Pietri 15:12, 12 October 2007 (UTC)

- I hope NS0 is mainspace, otherwise my head will explode. Please, please don't use jargon like NS0. It means nothing to most people and is not a widely used phrase. Carcharoth 15:19, 12 October 2007 (UTC)

- Are you including edits to articles that were subsequently deleted, and edits to redirected pages? (For example, by using the revision_id as an indicator of edit rate.) Both of which would have been excluded in my counts. Dragons flight 15:59, 12 October 2007 (UTC)

A comparison of Gmaxwell's and my edit count rates, after averaging his counts to weekly values to match mine. His values are systematically higher, which I presume means he is including something I am not (deleted articles?, redirected pages?). He also gets an uptick in the last couple weeks. Since the last available page table is from September 8th, I don't have any articles created after September 8th, which I assume is responsible for that uptick (a larger shift than I would have guessed given the fraction of new articles per week compared to the 2 million existing ones). However, I knew that bit was missing, so in my head I generally figured trend lines as approximately March 1st to approximately Sept. 1st. (Even Greg must agree that this has negative slope.) That also fits in with the logging table dump, which again ends in early September.

If Greg wants to assert that the last couple weeks are enough evidence that the decline is over and we are growing again, then that is not unreasonable (though possibly premature and I would like to see a reversal in other indicators as well). However, I disagree with his description of my work as "flawed" and would assert that the "power law" argument made by him and one other person on this page (as a reason for criticizing my analysis) is nonsensical: a random sampling will capture the power law exactly as it should. Dragons flight 16:41, 12 October 2007 (UTC)

- You're not randomly sampling the EDITS you're randomly sampling the PAGES. The edits are not uniformly distributed to pages, so sampling on the pages does not allow you to accurately estimate the editing rate.

- Imagine a room with 1000 people. They each have wallets with money in them; the money is randomly distributed with a uniform distribution. You check 5% of their wallets, then multiply the result by 1/.05. Doing this you'd get a pretty good estimate.

- Now, imagine the money wasn't uniformly distributed. Say one person has $1,000,000, the next has $500,000, the next has $250,000, tapering off to $5, and then most people (~980) have about $1-$5. Now sample 5% of the people and multiply by 1/.05. Chances are you will massively underestimate the total money in the room, though there is a small chance that you might massively overestimate it.

- If you doubt it write yourself a quick script to simulate it.

- The actual distribution of edits to articles isn't anywhere that slanted, but the same general theory applies. *If you randomly sampled edits rather than pages you'd be fine*.

- I'm including deleted edits, since it's somewhat flawed to exclude them when you're talking about the effort put in by people editing. But that does not account for the difference: the mean number of deleted edits per day is 7974, which accounts for some but not most of the difference ... and the difference isn't constant. When I post the detailed data in a bit, the deleted edits will be easy to break out if you like.

- As far as the discontinuation of exponential growth goes, I agree that might be news to some folks even though it is months old news but it wasn't some great and shocking discovery of yours. I provided a citation that it was indeed known before (also see [1] and [2]). It was also expected: A failure to grow exponentially forever is not a decline, however, and it's highly disputable to characterize it as one.

- I don't consider the recent upticks to be a resumption of exponential growth. I doubt exponential growth will or can resume. German Wikipedia hasn't seen a resumption of exponential growth. Rather, it's an increase which we've seen every year that coincides with school schedules in some populated places.

- Please understand, I appreciate the work you've done. You could have done it better with less work had you solicited and accepted some help from other folks. What I'm mostly concerned about is that you've decided to couple it with a shocking and potentially misleading headline. That's irresponsible. It's bad science.

- ... and incidentally, you've used a non-zero index graph above. Certainly you must know that doing so increases the apparent change, as it's a classic way of lying with data (example 3). You might want to fix that... :) --Gmaxwell 19:59, 12 October 2007 (UTC)
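
For anyone who wants to try the "quick script" suggested above, here is one possible sketch of the wallet thought experiment. The halving tail, the uniform $1-$5 range for everyone else, the seeds, and the number of repetitions are all assumptions chosen to match the description loosely; they are not taken from real edit data.

```python
import random
import statistics

def make_room(n_people=1000, seed=0):
    """Build the skewed room: a halving tail of rich wallets, the rest $1-$5."""
    rng = random.Random(seed)
    wallets = []
    amount = 1_000_000.0
    while amount > 5:            # 1,000,000, 500,000, 250,000, ... down to ~$5
        wallets.append(amount)
        amount /= 2
    wallets += [rng.uniform(1, 5) for _ in range(n_people - len(wallets))]
    return wallets

def estimate_total(wallets, fraction, rng):
    """Check a random fraction of wallets and scale up by 1/fraction."""
    k = round(len(wallets) * fraction)
    return sum(rng.sample(wallets, k)) / fraction

room = make_room()
true_total = sum(room)
rng = random.Random(1)
estimates = [estimate_total(room, 0.05, rng) for _ in range(2000)]
under = sum(e < true_total for e in estimates) / len(estimates)

print(f"true total:      {true_total:,.0f}")
print(f"median estimate: {statistics.median(estimates):,.0f}")
print(f"share of runs that underestimate: {under:.0%}")
```

The estimator is still unbiased on average; the point of the experiment is that with a sample this small relative to the skew, most individual runs land far below the true total and a few land far above it.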

- For what it's worth, zero-based graphs can be another way of lying with data. The lie, zero-baselined or not, comes when the graph's baseline or proportions are different than the reader's mental baseline, giving them a false intuition. His graph is reasonable to use if the context is, "let's make a close comparison of X and Y to see how they relate," which is how I took it. If not, then any lie here is in your favor, as presumably he'd be trying to minimize the apparent difference between the two methods, not maximize it, which is what a non-zero baseline does here. William Pietri 21:08, 12 October 2007 (UTC)

- Ah, I saw it as trying to show that both demonstrated this claimed ongoing decline. In terms of difference it would be most accurate to state that my numbers were 13% higher on average (or whatever the number actually is). It would be easiest to argue that the error was roughly constant using a table of the differences, but alternatively a difference line plotted on the same scale would also be fairly informative. ... Since I'm not sure what multiplier(s) he has used, I can't reproduce his graphs. --Gmaxwell 21:27, 12 October 2007 (UTC)

- William is correct that my point was to show the comparison. I have been scaling to 2 million articles exactly. It occurs to me now that this might be a good chunk of the discrepancy. Wikipedia's official "article" count is close to 2 million (within a few percent, and even closer a couple weeks ago) but it occurs to me that I'm not sure how many main namespace pages exist but aren't counted towards that 2 million. (I'm not in a position to figure it out till later today).

- As to the other point, I reiterate that the sampling bias is essentially negligible (much smaller than the fluctuations being observed). Obviously, if one measures the edit history of every page you must get a correct total edit count, regardless of the distribution of edits per page. As one samples randomly from the page population you introduce an uncertainty. Since the edits per page distribution is non-uniform, that uncertainty is skewed (substantially in the case of an exponential population). However, one has to consider the size of that uncertainty, and for a sample of 100,000 out of a population of 2 million, I contend that the error in the estimate of the total number of edits is negligible. As an approximation, consider a set R of 2 million uniform random numbers chosen between 0 and 1. Let A be the set such that a = 1+25*log(r)^2 where a is in A and r is in R. The set A is then a collection of random numbers between 1 and infinity with mean ~51. For my particular instantiation of R, I get an A with values between 1.0000 and 7482.3, and mean = 51.0131, median = 13.0158. This distribution is roughly as exponentially skewed as the true edit per page population, though yes, one could come up with better approximations. The sum over my A is 1.0203×10^8. So let's sample 100,000 members of the 2 million in A and ask what error one makes towards the total sum via sampling. Fractional error in ten random samples: 1.06%, 0.36%, 0.039%, -0.68%, -0.015%, 0.038%, -1.08%, 0.14%, -0.40%, -1.63%

- The point here is that 100,000 is a large sample. Yes, non-uniform distributions lead to non-uniform uncertainties, but in the limit of sampling everything you'd still have to get the right answer. 118,000 articles is a large enough sample that expected sampling bias towards edit counts as a result of the non-uniform edits per page distribution is still tiny. Test it yourself, if you still don't believe me.

- I stand by my characterization. The decline is shocking to me. If we were looking at this back in November last year, when we were still on a strong upslope, I contend there is virtually no chance that any of us would have guessed that the edit rate now would be similar to what it was then. Congratulations to yourself, and Andrew, etc., for noticing the changes first, but the history of the last year is not one that speaks to me of stabilization at the end of a long exponential growth, but rather of moving backwards, i.e. a decline. We could have leveled off gradually, but in my opinion, we did not. I feel that it would have been more irresponsible to be aware of these changes and not try to draw the community's attention to them. Dragons flight 22:24, 12 October 2007 (UTC)
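
The synthetic test described in the comment above (2 million values a = 1+25*log(r)^2, then samples of 100,000 scaled up to the full population) can be reproduced with a sketch like the following. It assumes `log` means the natural logarithm, which is what gives the stated mean of ~51; the seed and variable names are arbitrary, and the exact error figures will differ from those quoted because the random draws differ.

```python
import math
import random

rng = random.Random(42)
N, K = 2_000_000, 100_000

# a = 1 + 25 * ln(r)^2 for uniform r; using 1 - random() keeps r in (0, 1]
# so that log(0) can never occur.
A = [1 + 25 * math.log(1.0 - rng.random()) ** 2 for _ in range(N)]
total = sum(A)

errors = []
for _ in range(10):
    sample = rng.sample(A, K)          # K distinct members, no replacement
    estimate = sum(sample) * N / K     # scale the sample sum to the population
    errors.append((estimate - total) / total)

print(f"mean of A: {total / N:.4f}")
print("fractional errors:", ", ".join(f"{e:+.2%}" for e in errors))
```

This only probes the synthetic distribution; how closely it resembles the real edits-per-page distribution is exactly what is disputed in this thread, so the result says nothing by itself about the measured discrepancy.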

- Thank you for admitting the straight dope on the sampling bias. I agree that the error should have been fairly small considering the size of the sample and the actual distribution of edits to articles, which was why I said nothing about it until I could actually get unsampled measurements for comparison.

- The difference turned out to be pretty large: An average of 10.8% if I assume you used a correction factor of 2000000/118793.

- Sizing the number to correct against should have been fairly easy: you randomly selected the articles from a big list of articles ... how long was the list? :) If the error in your results were only due to picking the wrong value, we would expect our results to differ by a constant factor, but the factor between your numbers (assuming you used 2000000/118793) and the actual ones swings between 0.8653 and 0.9421. ::shrugs::

- It's fair enough for you to say that it's shocking to you... that's your own opinion. But the difference isn't large compared to the number of edits that we already have. If you look at a graph of the total number of edits (see the blue line in Ais523's graph above) you can't even see any obvious change in the slope. The difference in the activity between now and the peak is similar to typical noise within a week (on average the weekly minimum is 15% smaller than the weekly maximum). I think that it's easy to argue that the rate is now constant with a fair amount of noise and some seasonal variability.

- And that's not to say that a now roughly constant editing rate isn't interesting ... It is, but it was covered previously without the level of concern you've applied to it. When did you expect the near-exponential growth to stop, and why? The notion that something must be wrong in order for us to see the growth rate go constant would seem to depend on the idea that the exponential growth could and should continue forever.

- In nature many exponential things stop being exponential in a fairly abrupt way when they reach saturation. In a one month window (roughly mid Aug. to mid Sept.) English Wikipedia was edited by ~950,000 distinct IPs. We can't be sure what sort of inflation comes from dynamic pool jumping, or what reduction comes from address sharing due to NATs, but typical numbers quoted to advertisers based on cookie counting put actual people at 2-4x distinct IPs. Let's just assume 1x, or a distinct IP == distinct person.

- It seems completely reasonable to me that the world can only support about that many people actually choosing to make an edit to Wikipedia per month and that we hit that limit around March, then there was a small decline in activity as the normal new user attrition took some of the last large growth batch along, combined with the regular summer decline.

- User registration patterns seem to support this argument too, but they are somewhat sullied by the fact that until the new year it was advantageous to create a lot of accounts in order to evade blocking because of how autoblock worked.

- Unfortunately I can't get historic distinct IP data. :( But all in all I think this hypothesis is a heck of a lot simpler and more likely than some weirdness involving Essjay. --Gmaxwell 23:36, 12 October 2007 (UTC)

Issue with 6% random sample

Assume that early pages entered into Wikipedia tended to be topics of more general interest, and over time people added more specialized topics. Further assume that the topics of most general interest get more ongoing edits. These plausible assumptions could lead to the phenomena reported even if overall activity is the same or increasing. Over time, a higher and higher proportion of articles taken by a random sample will be niche articles showing a lower rate of activity.

By analogy, assume an omniscient being periodically used 6% samples to observe how often Encyclopedia Britannica articles were read, and initially there were only Macropedia articles, with Micropedia articles gradually added. At first a high rate of reading of the popular general Macropedia articles would be observed. Later the sample would be mostly the more numerous Micropedia articles; a lower rate of reading would be found. Yet the overall rate of reading could be the same or even be rising. —Preceding unsigned comment added by 131.107.0.73 (talk) 14:57, 12 October 2007 (UTC)

Sampling is not an issue

I've plotted the full edits-per-day history of the English Wikipedia. The graph shows exactly the same dynamics. Gritzko 16:59, 18 October 2007 (UTC)

- Dragons flight's numbers disagreed substantially with the non-sampled data. It didn't change the shape of the graphs but the numbers were most certainly off. --Gmaxwell 20:45, 18 October 2007 (UTC)

Any conclusions yet?

Can we conclude that the initial analysis was flawed by incorrect sampling (no discredit to Dragons flight - without the initial analysis, the later analyses would have either not been done, or done later)? If so, how do we put the genie back in the bottle? I predict that a myth has been born here - that the rate of Wikipedia edits and contributions is declining. I see this has been reported on Slashdot - any chance of corrective reports going out to various places? Carcharoth 15:30, 12 October 2007 (UTC)

- I disagree with the "flawed" label (see above). Obviously, I can't control the reporting elsewhere, but I think the "decline" is news to most people (Gmaxwell's protestations about it not being news, notwithstanding). Whether or not it is still "declining" is an important point for us (and one I am sure Wikipedians will be looking at for some time), but I think you'd have a hard time convincing any news outfit to publish a correction about how we declined X% since March but we have gone slightly upward (in some metrics) in the last month so don't hold the previous losses against us. Since we are still well below the peak, I'd think such a correction (even if justified) is unlikely to see the light of day. Dragons flight 17:01, 12 October 2007 (UTC)

I think, in order to better draw conclusions, it would be helpful to have a graph that charts site traffic against edit rate and article creation rate. We need to see how much of the pattern is driven by the underlying traffic patterns, and how much is caused by content saturation, policy changes, community changes and the like. Or even better, edit rate and article creation rate scaled to account for traffic.--ragesoss 17:52, 12 October 2007 (UTC)

- Also exciting is to graph the overall traffic on the internet vs Wikipedia traffic. A while ago some people observed that the page view traffic has moved closely along with internet backbone traffic. :)--Gmaxwell 20:04, 12 October 2007 (UTC)

- I'm sure I should know what internet backbone traffic is (it sounds rather exciting). Ah, I see we have an article. So what does that interesting observation actually mean? I'd hazard a guess that Wikipedia is an information parasite feeding off the "interconnected commercial, government, academic and other high-capacity data routes". :-) Carcharoth 03:41, 13 October 2007 (UTC)

- Alternatively, Wikipedia is evolving into God? (easter egg joke). Carcharoth 03:44, 13 October 2007 (UTC)

new page creation vs. abilities of anons to create new pages

When was it again that anons were no longer allowed to create new pages? This may explain the drop in the number of new page creations. Just a thought -- Chris 73 | Talk 16:23, 12 October 2007 (UTC)

- December 2005, ... it did result in a reduction in new article creation for a while, but that was soon hidden by the overall growth rate. It's fun to see what it did to account creation [3]. ;) --Gmaxwell 20:35, 12 October 2007 (UTC)

Might rel="nofollow" be the cause of activity decline?

Maybe Wikipedia's activity decline is caused by adding rel="nofollow" to each external link in articles?

It's about motivation: people do love adding back-links leading to their sources. So, when Wikipedia attached rel="nofollow" to each outbound link, people lost part of their interest in adding content.

Could it be the cause? - Rajaka 19:16, 12 October 2007 (UTC)

- And why would they have lost interest? --Gmaxwell 19:21, 12 October 2007 (UTC)

- Because with "nofollow" their external links are nothing for Google now, but many people want them to be meaningful - Rajaka 15:17, 15 October 2007 (UTC)

- Do you mean that adding nofollow would have greatly reduced the number of spam edits? If so, then that's evidence that it was a good idea. --ais523 13:51, 13 October 2007 (UTC)

- I think this may be the case. However, to prove it, deeper statistical analysis is needed. - Rajaka 15:17, 15 October 2007 (UTC)

Hypothesis

A nice collection of data there. I suspect that it results from a few different factors.

I think a major consideration is that the "easy" topics are increasingly already covered pretty thoroughly. I've heard some refer to this as the low hanging fruit that has already been picked. If new readers don't see a need to contribute, they won't stick around. This would also explain the higher percentage of reverts, as Wikipedia's growing exposure still attracts vandals and POV warriors looking for a high-profile way to show off or spread "The Truth".

This may become an increasing issue, if we can't find ways to keep those who will make valuable contributions and discourage problem editors. These types may also accelerate the problem by wearing down and driving away valuable editors who have already been contributing, too.

As pointed out before, Wikipedia couldn't grow forever. I think this may be another sign that we need to re-evaluate Wikipedia's purpose and processes (i.e. Flagged revisions). Sχeptomaniacχαιρετε 21:04, 12 October 2007 (UTC)

- The low-hanging fruit for new articles may well be gone, but for improving articles? Per Wikipedia:Version 1.0 Editorial Team/Work via Wikiprojects, of the roughly 910,000 articles whose quality has been assessed by WikiProjects, only about 50,000 were rated "B" class or better; the remaining articles are either "Start" (230,000) or "Stub" (630,000). -- John Broughton (♫♫) 21:29, 12 October 2007 (UTC)

- Having worked on some articles in the past week, and observed that the lag time between an article being edited and the rating being updated is shockingly long, I'd say that many ratings are out-of-date. Most editors (me included) won't change ratings based on their own work. Given the sporadic nature of Wikipedia updating for low-traffic articles, it also seems unlikely that articles are systematically re-rated unless you shove them in front of people and say "please assess this". I'd say only the high-traffic areas are carefully updated in this way. There should probably be much more of a culture of changing ratings (if they exist) when de-stubbyfying or doing major expansion or referencing, or an acknowledgement that ratings only really mean something after periodic "rating drives" (or rather "re-rating drives") by said WikiProjects, i.e. big lag times of half a year or more for large WikiProjects. This is another reason to discourage WikiProjects from trying to include too many articles under their scope - they need to focus on improving a limited number regularly, rather than a lot infrequently. Carcharoth 03:39, 13 October 2007 (UTC)

Another hypothesis is the "outing" of Wikipedia's liberal deceit. Anyway, that's what folks at http://www.conservapedia.com think. --Uncle Ed 19:01, 13 October 2007 (UTC)

- Yeah, it's due to Conservapedia. I'm sure that's the reason. Brilliant. R. fiend (talk) 16:37, 21 November 2007 (UTC)

Other slices

Just curious, has anyone ever seen trend charts on the following sets of data?

- Quantity of mainspace articles added per day, v. quantity of mainspace articles deleted per day?

- Average "lifespan" of editing account, from first edit to last edit?

- Average lifespan of administrator account, from adminship to burnout/disappearance?

- A piechart showing % of articles by general topic area? Sports, entertainment, history, biography, uncategorized?

- A trend chart of the above? My guess is that it would show the % of sports/entertainment/pop culture articles overtaking history, but not sure.

My own field is in Multiplayer games (I'm the General Manager of Online Community at play.net), and I'm seeing many of the same patterns on Wikipedia, as in MMOs. The falloff in numbers right now would be equivalent to the end of what we call the "novelty spike" in gaming, meaning that when a game first opens, it gets a big rush of attention, and then things drop off. It doesn't mean the game is bad, it just means the novelty is over. :) Also in gaming, the typical person "plays" a game from 6-18 months, though of course many play less, or stick with a game for decades. I'd love to see some data to see if similar patterns are showing up here.

Just curious, thanks, Elonka 18:19, 15 October 2007 (UTC)

- Hm. I posted some lifespan graphs in the past but I can't find them now. I should probably remake them. At the time I looked before, accounts that survive past a starting threshold tended to stay around.

- Can you suggest a good way to classify articles? I tried using "the shortest path from a top level category to the article page" but that obviously misclassifies a lot due to semantic drift and outright breakage in the category hierarchy. At the time I was only trying to separate hard science from pop-culture and I found the approach too unreliable.

- --Gmaxwell 18:39, 15 October 2007 (UTC)

- Following the category tree strikes me as the best way to do it. I'd start with something like Category:Main topic classifications, and just see what you get on a first pass. Maybe also build a table with all the existing categories, and then you could manually "tick" or "untick" certain sections if they were distorting the data. Might also be necessary to target lower subcategories such as Category:Entertainment or something that's less ambiguous, if you run into lots of conflicts. :) --Elonka 18:58, 15 October 2007 (UTC)

- The category system has some weird diversions however. For example, I recently encountered Sports->Water sports->Sailing->Winds->Wind power->Wind turbines->Wind turbine manufacturers, which tells us that General Electric is a sport. ;-) In fact there is so much lateral movement that a path exists from Category:Sports to a very large fraction of all Wikipedia articles. Dragons flight 20:00, 15 October 2007 (UTC)

- True, but couldn't you flag certain keywords as "primary"? For example, GE or any other company is probably going to have a "Companies" category: "Companies on this stock exchange, companies founded in <year>," etc. You could set your algorithm to scan for one of those "clear" identifiers, and then ignore the other categories and move on. So Tony Hawk would get as far as "American skateboarders" and Dalai Lama would get as far as "Lamas", and you wouldn't need to trace any of the other categories on the page. --Elonka 20:04, 15 October 2007 (UTC)

- There are ~282,440 categories. Who will tag 'primary ones'? ... and besides, many categories are 'primary' in one use and not primary in another. For example, we have articles where Category:American_cheerleaders is the primary category, but that's probably not the case for George W. Bush (hey .. wait a minute!). So really each article would need to be adjusted.. Now we're up to 2 million things to check. :( It's not impossible to get an approximate answer, but I've tried (see above) and the simple and obvious measures produce obviously bad results. --Gmaxwell 21:04, 15 October 2007 (UTC)

- Just brainstorming here: I wonder if we could request folks to put a "primary" category as the first category on an article? Then all your tools would have to do would be to scan that one? Or would that just lead to endless battles as people then had something new to argue about? "It's a game! No, it's a beer! No, it's a sport-drink!" Blah. :/ --Elonka 21:26, 15 October 2007 (UTC)

- As another example, University of Maryland, College Park is three (geographically based) categories removed from Cat:Colleges and Universities, but only two cats removed from Cat:Sports leagues, thus clearly the University of Maryland is "more" a sports league than a university. The category structure does not easily translate to simple partitioning that a machine can work with. Dragons flight 21:54, 15 October 2007 (UTC)
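To make the shortest-path problem concrete, here is a minimal Python sketch on a toy graph. It is only an illustration, not the method anyone in this thread actually ran: the Sports chain is copied from the example above, but the intermediate categories on the University of Maryland paths are placeholders invented to reproduce the "three versus two" distances, and `SUBCATS`, `depth_from` and `classify` are hypothetical names.

```python
from collections import deque

# Toy parent -> children edges. The Sports chain follows the example quoted
# above; the "(placeholder ...)" nodes are invented stand-ins for categories
# that the thread does not name.
SUBCATS = {
    "Sports": ["Water sports"],
    "Water sports": ["Sailing"],
    "Sailing": ["Winds"],
    "Winds": ["Wind power"],
    "Wind power": ["Wind turbines"],
    "Wind turbines": ["Wind turbine manufacturers"],
    "Wind turbine manufacturers": ["General Electric"],
    "Colleges and Universities": ["(placeholder geo 1)"],
    "(placeholder geo 1)": ["(placeholder geo 2)"],
    "(placeholder geo 2)": ["University of Maryland, College Park"],
    "Sports leagues": ["(placeholder league)"],
    "(placeholder league)": ["University of Maryland, College Park"],
}


def depth_from(root, target):
    """Breadth-first search: number of edges from root to target, or None."""
    seen = {root}
    queue = deque([(root, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == target:
            return dist
        for child in SUBCATS.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append((child, dist + 1))
    return None


def classify(article, roots):
    """Assign the article to the reachable root with the shortest path."""
    dists = {root: depth_from(root, article) for root in roots}
    reachable = {root: d for root, d in dists.items() if d is not None}
    return min(reachable, key=reachable.get) if reachable else None
```

On this toy graph, `classify("University of Maryland, College Park", ["Colleges and Universities", "Sports leagues"])` picks the sports root because its path is one edge shorter, and General Electric is reachable from "Sports" at all. Those are the two failure modes described above: nearest-root assignment follows whatever lateral links happen to exist.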

- (more brainstorming) What about instead of analyzing the articles, analyzing the categories? Could we start at Category:Main topic classifications, pick one, and then figure out how many children subcategories that it has? --Elonka 23:19, 15 October 2007 (UTC)

- Elonka - is there any academic research on the trends of participation in MMOs that you mention above? Can you suggest some? -- !! ?? 11:36, 16 October 2007 (UTC)

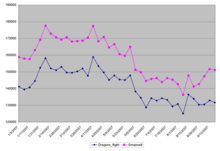

Article creation vs Deletions

The first part of the question, about the quantity of mainspace articles added per day v. the quantity of mainspace articles deleted per day, has not been discussed above. Compare the numbers in these graphs:

About 1700 articles are created a day (not including deleted articles) and at the peak (January 2007) about 2000 articles were deleted a day. So actually about 3700 articles were created a day and roughly half of them got deleted. HenkvD 19:52, 16 October 2007 (UTC)

- You can't simply take 'number of deletions' to mean 'number of deleted articles'. If I move an article and then delete the redirect, that's technically a deletion, and every time someone does a revision deletion, that's a deletion... Because of factors like these it's difficult to get an accurate count of the number of articles in the past. --Gmaxwell 13:58, 17 October 2007 (UTC)

Anons and reverted edits

I think that the community's perception of anon editors makes them more likely to be reverted. I often edit without bothering to log in, for a number of reasons (mostly editing from public computers). When I am logged in, my edits are very rarely reverted. When I make the same sort of edits without being logged in, people use rollback on them, and I have been blocked more than once. Because of how anons are perceived, I am more likely to explain changes at length in talk. But my edits are far more likely to stand if I make the edit as a logged in user and don't bother to explain it. As your analysis shows, anons contribute a lot of content. But an awful lot of editors feel that they are free to treat them like shit. True, lots of vandalism is done by anons, but so are a lot of good contributions. Maybe it's the cavalier attitude towards anons that's driving away potential new editors. 72.200.212.123 14:05, 16 October 2007 (UTC)

- What you're saying isn't new to me: I've experienced it first hand. But what should we do? Is this actually an argument for turning off anonymous editing under the theory that a bad first impression is harmful enough that it doesn't offset the benefit of making things easier for new users? --Gmaxwell 14:01, 17 October 2007 (UTC)

Obscure topics and information availability

As time goes on, and the "low hanging fruit" is picked and the easy articles are created, it becomes more and more challenging to find topics to create articles about, as noted above. I also will note that a lot of this is driven by the easy availability of online sources to draw from for articles. If it is easy to get online information about a topic, then an editor is more likely to write an article about it. The availability of online information is growing, but there are many factors involved:

- There are several projects to digitize books and make them available online. Slowly more and more of this material is available.

- magazine, newspaper, radio, television and other media are struggling to decide what kinds of information to make freely available, and what historical information to make available.

- Some organizations are taking previously freely available information and making it less accessible by charging for it

- Some libraries are making this information that is only available by subscription more freely available

- Not only is the easily searched part of the internet growing, but the deep web as well. Our tools for probing both the main part of the internet and the deep web are evolving.

- As some things lose their copyright protection, they become more widely available.

- Governments, businesses and other organizations are putting more and more information online

I have often started to write an article, and then realized that I do not have good access to sources online for the article. And I abandon it. If I have good sources, then I continue and make an article.--Filll 15:20, 16 October 2007 (UTC)

- You might be right! On Wikipedia:Modelling Wikipedia's growth the proposed logistic growth model is based on:

- * more content leads to more traffic, which in turn leads to more new content

- * however, more content also leads to less potential content, and hence less new content

- * the limit is the combined expertise of the possible participants.

- The last point refers to the knowledge of the participants, but more likely it helps a lot if information is available online! HenkvD 19:36, 16 October 2007 (UTC)
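For readers who want to see the shape these three assumptions produce, here is a small Python sketch of a discrete logistic curve. The function name and every number in it are made up for illustration; nothing here is fitted to Wikipedia data, and the actual model on Wikipedia:Modelling Wikipedia's growth may be parameterised differently.

```python
def logistic_growth(n0, rate, capacity, steps):
    """Iterate N <- N + rate * N * (1 - N / capacity), one step per period.

    rate * N      : more content brings more traffic and so more new content
    (1 - N / cap) : the share of potential content that is still unwritten
    capacity      : the limit set by the participants' combined expertise
    """
    sizes = [n0]
    for _ in range(steps):
        n = sizes[-1]
        sizes.append(n + rate * n * (1 - n / capacity))
    return sizes


# Made-up numbers, chosen only to show the shape of the curve.
sizes = logistic_growth(n0=1_000, rate=0.5, capacity=1_000_000, steps=40)

# New content per period: rises while traffic feedback dominates, then falls
# as the remaining potential content runs out.
new_per_step = [b - a for a, b in zip(sizes, sizes[1:])]
peak_step = new_per_step.index(max(new_per_step))
```

The point of the sketch is that new content per period peaks roughly when half of the capacity is used up, so a falling creation rate is what this model predicts even while the total keeps growing.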

Real article

Can this all be collected together and used to produce a real article for Wikipedia? It is clearly fascinating.--Filll 15:27, 16 October 2007 (UTC)

Could the decline be related to page protection?

The decline starts at about the same time that, as I recall, the less-prominent semiprotection and protection templates started to become widely used. It used to be the standard to use Template:sprotected, which was highly visible and invited users to register. Now we use these tiny little icons in an easily-overlooked part of the screen. There are a few ways to indirectly measure whether our preference for the less-prominent template has affected the rate of new registrations. One method is to do usability testing to determine whether people understand why they can't edit semi-protected pages, whether they realize they can edit the page if they register and wait, and whether they are more likely or less likely to choose to edit Wikipedia after viewing one template or another.

Another method is to look at trends in the number of edits to semiprotected pages over time, particularly from new accounts. I.e. count how many edits were made on date x on pages that were semiprotected on date x. Is there a way to filter these results just to semiprotected pages and see what that graph is like?

It would be best to use both methods. One thing that is difficult to measure just by looking at logs is whether a particular semiprotection template on page x makes one less likely to edit page y, as would happen if people can't find the Edit button on a semiprotected page and don't look for it again. Kla’quot (talk | contribs) 07:01, 23 October 2007 (UTC)
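The second method ("count how many edits were made on date x on pages that were semiprotected on date x") could be sketched roughly as below. This is only an outline under assumptions: the tuple and dictionary layouts are hypothetical, and real protection-log and revision data would first have to be parsed into that shape.

```python
from collections import Counter
from datetime import date


def edits_on_semiprotected(edits, protections):
    """Count, per day, the edits made to pages semiprotected on that day.

    edits: iterable of (page, day) tuples.
    protections: dict mapping page -> list of (start, end) date intervals,
    where end=None means the protection is still in force.
    Both layouts are hypothetical, chosen for this sketch.
    """
    counts = Counter()
    for page, day in edits:
        for start, end in protections.get(page, []):
            if start <= day and (end is None or day <= end):
                counts[day] += 1
                break  # count each edit once even if intervals overlap
    return counts


# Tiny invented example: one page protected for a week, one never protected.
example_protections = {"Page A": [(date(2007, 1, 1), date(2007, 1, 7))]}
example_edits = [
    ("Page A", date(2007, 1, 3)),   # inside the protected interval
    ("Page A", date(2007, 1, 9)),   # after protection ended
    ("Page B", date(2007, 1, 3)),   # page never protected
]
example_counts = edits_on_semiprotected(example_edits, example_protections)
```

Restricting `edits` to those made by recently registered accounts before calling the function would give the "particularly from new accounts" variant.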

Compare two users' edit times?

Hello Mr./Ms. Dragons flight, pretty graphs, very interesting. I just saw this:

Do you know if there is any tool to compare the daily times that a wikipedian has been editing? If not how do you go about doing this?

- Have you ever considered writing a "how to" for new users? Thanks. Travb (talk) 11:59, 28 October 2007 (UTC)

Regarding reverted edits...

I notice that it appears that this analysis does not account for the possibility that one reversion may undo multiple edits, based on the description of what is considered a "Reverted edit." Dansiman (talk|Contribs) 09:25, 28 December 2007 (UTC)

P.S. A suggestion for future analyses of this type: After identifying an edit as a revert, it should be possible to identify which previous edits' diffs negate the revert edit. Granted, this would take some pretty complex coding, but I'm sure a dedicated programmer (not me!) could do it. Dansiman (talk|Contribs) 09:54, 28 December 2007 (UTC)
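One simple way to approximate the suggestion above, sketched in Python: treat a revision as a revert when its full text is identical to an earlier revision, and count every revision in between as undone. This is an assumption-laden stand-in, not the definition of "Reverted edit" used in the analysis, and it misses partial reverts that do not restore an exact earlier text. `find_reverts` is a hypothetical name.

```python
import hashlib


def find_reverts(revision_texts):
    """Return (revert_index, restored_index, undone_indices) tuples.

    revision_texts: the full text of each revision, oldest first.
    A revision counts as a revert if its text matches an earlier revision
    that is not its immediate predecessor.
    """
    last_seen = {}  # content hash -> index of the latest revision with it
    reverts = []
    for i, text in enumerate(revision_texts):
        digest = hashlib.sha1(text.encode("utf-8")).hexdigest()
        j = last_seen.get(digest)
        if j is not None and j < i - 1:
            # Everything strictly between j and i was undone by revision i.
            reverts.append((i, j, list(range(j + 1, i))))
        last_seen[digest] = i
    return reverts
```

For a history such as `["A", "A vandal", "A vandal more", "A", "B"]`, the fourth revision restores the first, so both intermediate edits are attributed to a single revert rather than only the last one.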

Image upload and deletion stats (2007)

Those reading this analysis may also be interested in Wikipedia:Non-free content criteria compliance#Weekly uploads and deletions and bot taggings. Discussion should be taking place at Wikipedia talk:Non-free content criteria compliance. Thanks. Carcharoth (talk) 01:41, 18 February 2008 (UTC)

New users graph

That's very interesting. Apparently, I joined right at the pinnacle. I wonder if we could do a sample of how many user accounts are created (and kept dormant) compared to those that become active editors. I wonder if there would be a peak at certain times, or if it's totally proportional to the accounts created. нмŵוτнτ 03:35, 19 February 2008 (UTC)

Suggestions for more analysis

In thinking about Wikipedia, one of the things I wondered is "what percentage of articles created survive?" From your graphs, it looks like article deletions are a substantial percentage of article creation, suggesting that the average Wikipedia article is in fact one that has been deleted. Is that the case?

Of course, given that any given article might be deleted tomorrow, in some sense this is hard to answer, so perhaps there are interesting graphs counting the lifespan of articles. I'd guess we'd see heavy infant mortality, where a lot of newly created articles are quickly deleted. But I don't have any idea what the rest looks like, or what the distribution is.

There's an interesting related set of questions around the lifespan of edits. A bunch of edits are reverted quickly, which is the same sort of infant mortality. I'd guess that the average edit is one that has already been replaced. But it'd be interesting to see what the lifespan distribution of edits is, and to see what the survival factors are.

Regardless, thanks again for these graphs. William Pietri (talk) 22:31, 30 March 2008 (UTC)
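The "might be deleted tomorrow" difficulty raised above is what survival analysis calls right-censoring, and the usual tool for it is a Kaplan-Meier estimate. Below is a minimal Python sketch of that estimator, offered as one possible approach rather than anything the original analysis did; the record layout and the function name are assumptions, and the same code would apply to edits if "deleted" is read as "replaced or reverted".

```python
def kaplan_meier(records):
    """Estimate the fraction of items surviving past each observed death age.

    records: list of (age_in_days, deleted) pairs. deleted=False means the
    article still existed when observation stopped (right-censored), so it
    counts as "at risk" up to that age but never as a deletion.
    Returns [(age, estimated fraction surviving past that age), ...].
    """
    at_risk = len(records)
    surviving = 1.0
    curve = []
    for age in sorted({a for a, _ in records}):
        deaths = sum(1 for a, d in records if a == age and d)
        leaving = sum(1 for a, _ in records if a == age)
        if deaths:
            surviving *= 1 - deaths / at_risk
            curve.append((age, surviving))
        at_risk -= leaving  # deleted and censored items both leave the pool
    return curve
```

Heavy "infant mortality" would show up as a steep drop in the first few days of the curve, followed by a long, nearly flat tail for articles that survive the initial period.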

update please?

It's a year later. What's happening with the trends? (p.s. good analysis.) -- Preceding unsigned comment added by TCO (talk • contribs) 17:24, 23 August 2008 (UTC)

Userbox wars

Another thing that was happening around the same time was the userbox wars. This was really key in that you had a policy change without consensus, and in fact against strong and passionate opposition, pushed through because "higher ups" wanted a change. This came out of an attitude that a small number of editors were creating the bulk of the content, even though later analysis showed that:

- lots of people work on a small number of articles, forming the core of these articles

- a small number of people revise / wikify content and bring it into compliance

That is, the bulk of users were devalued. This also resulted in a shift towards deletionism at Wikipedia. The most notable example was images which were free or licensed for Wikipedia but not redistributable under the GFDL: they were deleted without replacements first being secured. That is, content was destroyed en masse.

There was a flood of users in 2006, while Wikipedia at the same time was trying to develop a more rigid culture. So the new users found they didn't like Wikipedia's culture. Their complaints weren't taken seriously, there were no meaningful vehicles to address policy concerns, and so it entered a slow growth phase. People wanted Wikipedia to become a more mature product and it has. jbolden1517Talk 23:20, 22 January 2009 (UTC)

Editors per month

Are there any data on the absolute number of accounts and IPs that contribute within a given period? Thanks (and nice work!). --Tom Edwards (talk) 12:21, 9 July 2009 (UTC)

For people still watching this page...

I redid my stats, to see what changes had happened over the last couple of years. See File:Wikipedia_approx_total_edit_count_over_time_graph_3.png and the discussion at Wikipedia:Village_pump_(miscellaneous)#Wikipedia_editing_stats_over_time. --ais523 19:28, 29 October 2010 (UTC)

Vandalism Studies Update - August 2012

Hello, members of the Vandalism Studies Project! As some of us are quite new with the Vandalism Studies project, it would make sense for us to re-read some of the past studies, as well as studies outside the project. Please do so if you have a chance, just so we can get into the groove of things. We're planning on attempting to salvage the Obama study (or possibly simply convert it to a new Romney study), as well as hopefully begin our third study this November. If you have any ideas for Study 3, please suggest them! If you have any questions please post them on the project talk page. Thanks, and happy editing - we can't wait to begin working on the project! --Dan653 (talk) and Theopolisme :) 11:31, 24 August 2012 (UTC)

If you would like to stop receiving Vandalism Studies newsletters, please remove your name from the member list.