Talk:Rotation matrix/Archive 2

Comments on the definition of a rotation matrix

As far as I can tell, this article on the rotation matrix was created in 2004, and by 2005 the signs on the off-diagonal terms had already been changed in an attempt to find a consistent definition. The current talk page goes back to 2006, with many editors citing references for the location of the minus sign on either side of the diagonal. In the past I have been reluctant to wade into this discussion, but the recent flare-up has moved me to offer the following.

Maybe it will help to begin by saying both views are correct, which sounds strange but hopefully allows us to focus on what are actually two different ways of viewing a rotation: (i) a transformation from coordinates in one frame to coordinates in another frame, and (ii) a transformation between two sets of basis vectors defining coordinates in the same frame. The two formulations are closely related and perhaps it is no surprise that they result in matrices that are inverses of each other, which for rotation matrices means the transpose of each other so they differ only in the location of the minus sign above and below the diagonal.

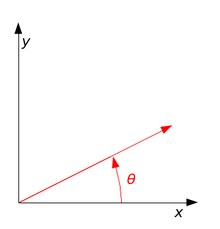

(i) Consider the first case, where a reference frame M is rotated counterclockwise by the angle θ relative to a reference frame F. A vector in M is x = xi + yj, where i = (1, 0) and j = (0, 1) are the natural basis vectors along the coordinate axes of M, so x = (x, y) in this reference frame. Now consider the coordinates X = (X, Y) of the same point, but measured in F. These are easily found by considering the vectors e_r = (cosθ, sinθ) and e_t = (-sinθ, cosθ), which are the images of i and j of M measured in the frame F, so X = x e_r + y e_t, or

$$\begin{pmatrix} X \\ Y \end{pmatrix} = \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix} \begin{pmatrix} x \\ y \end{pmatrix}.$$

This version of the rotation matrix has the vectors e_r = (cosθ, sinθ) and e_t = (-sinθ, cosθ) as column vectors, which means the minus sign is located on the upper-right sine term.

(ii) Now consider the second case, where the vectors are measured in the same frame F, so i and j are the natural basis vectors along the x and y axes of F, and the unit vectors e_r = (cosθ, sinθ) and e_t = (-sinθ, cosθ), measured in F, form a pair of orthogonal unit vectors rotated counterclockwise by the angle θ. The transformation from coordinates relative to the basis vectors i and j of F to coordinates relative to the new basis vectors e_r and e_t in F is easily obtained by computing i = cosθ e_r - sinθ e_t and j = sinθ e_r + cosθ e_t. This means a vector X = Xi + Yj in F becomes x e_r + y e_t in the rotated basis, with the transformation

$$\begin{pmatrix} x \\ y \end{pmatrix} = \begin{pmatrix} \cos\theta & \sin\theta \\ -\sin\theta & \cos\theta \end{pmatrix} \begin{pmatrix} X \\ Y \end{pmatrix}.$$

Now the columns of the rotation matrix are the coordinates of the natural basis vectors i and j as measured in the new basis e_r and e_t. This places the minus sign on the sine term below the diagonal.
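A minimal Python sketch of the two matrices just described, assuming 2×2 matrices stored as nested lists (the names `case_i`, `case_ii` and `coords_in_rotated_basis` are hypothetical labels for this illustration, not from any library). It checks that the case (ii) matrix is the transpose, and therefore the inverse, of the case (i) matrix, and that case (ii) agrees with computing coordinates as dot products against e_r and e_t:

```python
import math

def case_i(theta):
    """Case (i): columns are e_r and e_t measured in F (minus sign above the diagonal)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s],
            [s, c]]

def case_ii(theta):
    """Case (ii): columns are i and j expressed in the basis (e_r, e_t) (minus sign below the diagonal)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, s],
            [-s, c]]

def transpose(m):
    return [list(row) for row in zip(*m)]

def matmul(a, b):
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]

def close(a, b, tol=1e-12):
    return all(abs(x - y) < tol for ra, rb in zip(a, b) for x, y in zip(ra, rb))

def coords_in_rotated_basis(theta, X, Y):
    """Coordinates of X*i + Y*j relative to (e_r, e_t), computed as dot products
    (valid because e_r and e_t are orthonormal)."""
    e_r = (math.cos(theta), math.sin(theta))
    e_t = (-math.sin(theta), math.cos(theta))
    return (X * e_r[0] + Y * e_r[1], X * e_t[0] + Y * e_t[1])

theta = math.radians(30)
A, B = case_i(theta), case_ii(theta)
print(close(B, transpose(A)))                 # True: case (ii) is the transpose of case (i)
print(close(matmul(A, B), [[1, 0], [0, 1]]))  # True: ... and hence its inverse

# Case (ii) applied to (X, Y) reproduces the dot-product coordinates.
X, Y = 2.0, 0.5
via_matrix = (B[0][0] * X + B[0][1] * Y, B[1][0] * X + B[1][1] * Y)
print(close([via_matrix], [coords_in_rotated_basis(theta, X, Y)]))  # True
```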

Hopefully, it is clear that the two ways to define a rotation matrix are inverses of each other, and they differ by the location of the minus sign. This same situation applies to rotations about each of the coordinate axes in three dimensions. The first case results in rotation matrices that have the minus sign above the diagonal for Rx and Rz and below the diagonal for Ry, while the second case yields the transpose of these matrices, with the minus sign below the diagonal for Rx and Rz and above the diagonal for Ry. I hope this is helpful. Prof McCarthy (talk) 05:06, 19 April 2013 (UTC)
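The three-dimensional sign pattern described above can be checked with a short Python sketch. It assumes right-handed axes and column vectors (these conventions are assumptions, not stated in the comment), and the helper names are hypothetical. A quarter turn about each axis should carry y to z, z to x, and x to y; that cyclic order is why the minus sign in Ry ends up below the diagonal:

```python
import math

# Case (i) basic rotations, assuming right-handed axes and column vectors.
def Rx(t):
    c, s = math.cos(t), math.sin(t)
    return [[1, 0, 0],
            [0, c, -s],   # minus sign above the diagonal
            [0, s, c]]

def Ry(t):
    c, s = math.cos(t), math.sin(t)
    return [[c, 0, s],
            [0, 1, 0],
            [-s, 0, c]]   # minus sign below the diagonal

def Rz(t):
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0],   # minus sign above the diagonal
            [s, c, 0],
            [0, 0, 1]]

def apply(m, v):
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]

def transpose(m):
    # The case (ii) matrices are simply these transposes.
    return [list(row) for row in zip(*m)]

def close(u, v, tol=1e-12):
    return all(abs(a - b) < tol for a, b in zip(u, v))

q = math.pi / 2  # a quarter turn
print(close(apply(Rx(q), [0, 1, 0]), [0, 0, 1]))  # True: y -> z about x
print(close(apply(Ry(q), [0, 0, 1]), [1, 0, 0]))  # True: z -> x about y
print(close(apply(Rz(q), [1, 0, 0]), [0, 1, 0]))  # True: x -> y about z

# Applying the transpose undoes the rotation.
v = [0.3, -1.2, 2.5]
print(close(apply(transpose(Ry(0.4)), apply(Ry(0.4), v)), v))  # True
```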

- Actually, there are several possible sources of ambiguity that need to be considered (passive/active rotation, column/row vector convention, left/right handedness). Anyway, your terminology is somewhat confusing. It is misleading, in my opinion, to state that, in your second example, "vectors are measured in the same frame F". What do you mean exactly? Do you agree that when you change basis you change frame? Paolo.dL (talk) 08:17, 5 May 2013 (UTC)

- There is a lot of room for confusion on this point. The analysis of rigid body systems such as linkage systems and robots generally uses separate reference frames attached to each body in the system, such as outlined in the Denavit-Hartenberg convention. However, traditional dynamics texts formulate the velocity and acceleration equations for Newton's Law or Lagrange's Equations in terms of basis vectors attached to various bodies that are defined in the base inertial frame. These approaches are closely related but not the same. In the first case, each body has a frame with a natural basis and a coordinate transformation between these frames, while for the second case there is only the base frame with a natural basis and all vectors are defined in this frame though often using alternate orthogonal bases for convenience of the formulation. Prof McCarthy (talk) 21:01, 5 May 2013 (UTC)

- I came to this article from Cauchy stress tensor. I am trying to keep consistency with the convention for the rotation matrix. What I see in this article is that it only addresses the rotation matrix for a vector in a fixed coordinate system: the vector rotates and one wants to find its new coordinates. However, what this article is missing is the rotation matrix for a rotating coordinate system (in other words, for when one wants to find the coordinates of a fixed vector in a rotated, primed coordinate system), or for when one wants to do a transformation of a tensor. That is what Prof McCarthy is stating. sanpaz (talk) 00:09, 30 May 2013 (UTC)

The difference between active and passive transformations is easy to understand, and clearly explained elsewhere (a summary is also included in this article). A transposition of the matrix suffices to switch from active to passive and vice versa. McCarthy's text makes everything much more complex, raises doubts instead of solving them, and therefore in my opinion is not helpful in this context.

By the way, the difference between active and passive transformations is not the only source of ambiguity in the definition and interpretation of a rotation matrix. See Rotation matrix#Ambiguities for details.

Paolo.dL (talk) 13:05, 30 May 2013 (UTC)

- Thanks Paolo, I did not know about the active and passive transformation page. It seems that page should be merged into this article. What do you think? sanpaz (talk) 16:07, 30 May 2013 (UTC)

- You're welcome. Active and passive transformation is not only about rotation matrices, and not only about rotations. Not all transformations are rotations, and transformations are not always represented by matrices. So, that article should not be merged into this one. However, a rotation-specific summary of that article is already included in section Rotation matrix#Ambiguities. Paolo.dL (talk) 18:59, 30 May 2013 (UTC)

- Understood. I would make a final suggestion: perhaps the title Ambiguities is too general. Perhaps there is a way to quickly guide the reader to the issue of passive versus active transformations. sanpaz (talk) 19:09, 30 May 2013 (UTC)

- People are still changing the matrix to its transpose, so I think we need to add a (possibly hidden) note early in the article. It is plain to me that the example in the text is of an active (alibi) rotation, but editors familiar with passive (alias) rotations think that the article is wrong and make changes before they get to the relevant section (and despite the fact that the working is laid out plainly). Dbfirs 19:13, 30 January 2014 (UTC)

Alias and Alibi Transformations

I do not find the discussion of alias and alibi rotations to be very helpful to the reader. The following quotes from Birkhoff and Mac Lane and from George Francis show that these terms define the point of view of the person using the transformation. They are not inherent to its mathematical formulation.

Birkhoff and Mac Lane[1] provide a useful discussion of transformations which uses the terms alibi and alias to describe the results of the transformations. They say:

- Equation (13) was interpreted above as a transformation of points (vectors), which carried each point X = (x₁, · · · , xₙ) into a new point Y having coordinates (y₁, · · · , yₙ) in the same coordinate system. We could equally well have interpreted equation (13) as a change of coordinates. We call the first interpretation an alibi (the point is moved elsewhere) and the second an alias (the point is renamed).

George Francis[2], University of Illinois, elaborates on the idea of alias and alibi transformations to explain the choices taken by Silicon Graphics in the development of their Iris geometric engine. He says,

- The geometers at Silicon Graphics, the company that builds the Iris, do not consider an affine transformation as something that transforms the objects to be displayed. They consider the affine transformation to act on the coordinate system. There is no harm in this attitude, it comes from the realm of movie maker and landscape artist. It is not adapted to the attitude of the industrial designer nor to the mathematician. The two attitudes are referred to as the “alias” and “alibi” approach to coordinate changes. To change coordinates means to give a point a false name. To change its position is to give it an alibi as to its whereabouts.

Prof McCarthy (talk) 23:26, 12 March 2014 (UTC)

- Isn't that exactly the distinction described in the article? Dbfirs 09:29, 13 March 2014 (UTC)

- I do not view this statement "The coordinates of a point P may change due to either a rotation of the coordinate system CS (alias), or a rotation of the point P (alibi)." to be in line with Birkhoff and Mac Lane or George Francis. The "ambiguity" of alias and alibi resides in the viewpoint of the user not the mathematics of the rotation matrix. A person can choose either viewpoint for any transformation. There is nothing in the rotation matrix itself, particularly the minus sign above or below the diagonal, that distinguishes these points of view. Prof McCarthy (talk) 11:55, 13 March 2014 (UTC)

- I don't see the difference. Am I missing something? I agree that nothing in the matrix determines whether an alias or an alibi is intended, or whether the rotation is clockwise or anti-clockwise, but we have to agree on one convention for the article, and the one chosen is the usual one at elementary level (except in computing). Dbfirs 07:48, 14 March 2014 (UTC)

- I recommend introducing the convention when discussing an application, and using the convention meaningful to the application. Prof McCarthy (talk) 13:42, 14 March 2014 (UTC)

- That is a sound policy. This article is about the rotation of vectors, not axes. Where do you think this should be made clearer? Dbfirs 15:41, 14 March 2014 (UTC)

- The article is about the rotation matrix. The rotation of vectors and the rotation of axes are applications of the rotation matrix. Prof McCarthy (talk) 03:07, 15 March 2014 (UTC)

- Yes, I see the point that you are making now. The article chooses to describe examples of rotation of vectors because that is conceptually slightly simpler, and this approach is the one that most people will meet first (unless they programme computers with rotating axes at a very early age). Perhaps the lead could make this clearer, with the information that I relegated to a note moved to the lead and clarified. I'll do this. Dbfirs 07:38, 15 March 2014 (UTC)

- Thank you for your patience with this. Your edits are helpful, but remember the same matrix can be viewed in either way. Prof McCarthy (talk) 10:17, 15 March 2014 (UTC)

- Yes, the example matrices can be either an anticlockwise rotation of the vectors or a clockwise rotation of the axes. I'll add that, too. Dbfirs 13:09, 15 March 2014 (UTC)
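The point that one matrix supports both readings can be illustrated with a minimal Python sketch (the names `alibi` and `alias` are hypothetical): rotating a point anticlockwise with fixed axes gives exactly the same numbers as keeping the point fixed and reading its coordinates in axes rotated clockwise by the same angle.

```python
import math

def alibi(theta, p):
    """Move the point: rotate p anticlockwise by theta, axes fixed."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = p
    return (c * x - s * y, s * x + c * y)

def alias(theta, p):
    """Rename the point: keep p fixed, rotate the axes clockwise by theta,
    and read off p's coordinates in the new axes."""
    u = (math.cos(theta), -math.sin(theta))  # new x-axis, in old coordinates
    v = (math.sin(theta), math.cos(theta))   # new y-axis, in old coordinates
    x, y = p
    # For orthonormal axes, each new coordinate is a dot product.
    return (u[0] * x + u[1] * y, v[0] * x + v[1] * y)

theta = math.radians(40)
p = (2.0, -1.0)
print(all(abs(a - b) < 1e-12 for a, b in zip(alibi(theta, p), alias(theta, p))))  # True
```

Nothing in the computed numbers tells the two interpretations apart; only the description attached to them does.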

Rotations in 2D

Just to clarify, the rotations are the same as those in the diagram, a counterclockwise rotation. If for example the angle is 45° the point (1, 0) is rotated to (0.707, 0.707). This is clearly given by

- $x' = x\cos\theta - y\sin\theta,$

- $y' = x\sin\theta + y\cos\theta.$

with θ = 45°. Interchanging the signs of the off-diagonal sine terms gives a different result, (0.707, -0.707), which is a clockwise rotation, the opposite of the sense described here. This is covered in the Non-standard orientation of the coordinate system section.--JohnBlackburnewordsdeeds 15:38, 11 April 2011 (UTC)

Hi, I'm 111.255.180.117. In your example, the point (1, 0) is rotated by 45°, so it becomes (0.707, 0.707) in the "original coordinates", but it still remains (1, 0) in the "new coordinates".

But in the equation, (x, y) is the value of the point in the "original coordinates" and (x', y') is the value of the "same point" (which did not rotate) in the "new coordinates". The point is, we want to get the value of the "same point" in "different coordinates".

The correct equation is

- $x' = x\cos\theta + y\sin\theta,$

- $y' = -x\sin\theta + y\cos\theta.$ —Preceding unsigned comment added by 111.255.180.117 (talk) 16:28, 11 April 2011 (UTC)

- I'm afraid I don't get your reasoning. In a little more detail here's mine.

- Starting with (1, 0), i.e. x = 1, y = 0.

- θ = 45°, so sin (θ) = cos (θ) = 0.707 (to 3 decimal places, it's √2/2 exactly)

- Put these numbers into the equations, i.e.

- $x' = x\cos\theta - y\sin\theta,$

- $y' = x\sin\theta + y\cos\theta.$

- gives

- x' = 1 × 0.707 - 0 × 0.707 = 0.707

- y' = 1 × 0.707 + 0 × 0.707 = 0.707

- So the result is x' = 0.707, y' = 0.707, or (0.707, 0.707). This corresponds to the diagram where the point rotated counterclockwise from the x-axis is in the positive x-y quadrant, so must have x and y positive.

- Using the equations you give above produces a different result, (0.707, -0.707), in a different quadrant, the result of a clockwise rotation, which does not match the description or the diagram.--JohnBlackburnewordsdeeds 16:41, 11 April 2011 (UTC)
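The arithmetic above can be reproduced with a small Python sketch (the function names are hypothetical). `rotate` uses the article's matrix; `rotate_transposed` uses the version with the off-diagonal signs swapped, as proposed by 111.255.180.117:

```python
import math

def rotate(theta_deg, x, y):
    """Anticlockwise rotation of the point (x, y) about the origin (the article's matrix)."""
    t = math.radians(theta_deg)
    return (x * math.cos(t) - y * math.sin(t),
            x * math.sin(t) + y * math.cos(t))

def rotate_transposed(theta_deg, x, y):
    """The same matrix with the off-diagonal signs swapped (its transpose)."""
    t = math.radians(theta_deg)
    return (x * math.cos(t) + y * math.sin(t),
            -x * math.sin(t) + y * math.cos(t))

print([round(v, 3) for v in rotate(45, 1, 0)])             # [0.707, 0.707]
print([round(v, 3) for v in rotate_transposed(45, 1, 0)])  # [0.707, -0.707]
```

The first result lies in the positive quadrant (anticlockwise rotation of the point); the second lies below the x-axis, which is a clockwise rotation of the point or, equivalently, the coordinates of the unmoved point in axes rotated anticlockwise.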

In your view, you rotate the "vector", not the "coordinates", like (talk) says. But x' and y' are "not" the values in the original coordinates; they are the values in the "new coordinates". Imagine an apple placed on a table in front of you: if you turn left, where is the apple?

Again, the rotation matrix represents the relation between two "different" coordinate systems. —Preceding unsigned comment added by 111.255.180.117 (talk) 16:57, 11 April 2011 (UTC)

- That is one way to do it, but not how this article does it, as it describes clearly in the article. It also highlights the difference in the section Ambiguities. The way it is done in the article is the most common way of doing it, with fixed coordinates and objects rotating.--JohnBlackburnewordsdeeds 17:08, 11 April 2011 (UTC)

- I agree with JohnBlackburne. We previously discussed a similar topic in this talk page. Paolo.dL (talk) 13:33, 12 April 2011 (UTC)

You should be careful in writing about the matrices. You should check the ref (Swokowski, Earl (1979). Calculus with Analytic Geometry (Prindle, Weber, and Schmidt).) better. — Preceding unsigned comment added by 193.255.88.133 (talk) 19:13, 10 April 2014 (UTC)

- I don't have a copy of that reference, but I think you will find, if you read it very carefully, that it uses a different convention, thus requiring the transpose of our matrices. Dbfirs 20:05, 10 April 2014 (UTC)

- Nope, this part cites this book (Swokowski, Earl (1979)) for the rotation equations. I checked it and found that what you have here is not quite correct. If not convinced, check this: http://math.sci.ccny.cuny.edu/document/show/2685 (page 3)

- Swokowski's example has rotating axes. Our article has rotating vectors. Both approaches are common and valid, as discussed above, but rotating vectors is both more common and easier for a beginner to follow (though if you were first taught rotating axes, you will think differently). I have previously made a couple of alterations to the article to clarify this, but you were correct that the reference was inappropriate at that point. I've moved it to a more appropriate place. Dbfirs 17:50, 26 April 2014 (UTC)

Erroneous statement

One sentence states:

"As well, there are some irregularities below n = 5; for example, SO(4) is, anomalously, not a simple Lie group, but instead isomorphic to the product of S³ and SO(3)."

The assertion that "SO(4) is . . . isomorphic to the product of S³ and SO(3)" is incorrect. SO(4) as a Lie group is not a direct product.

(As a differentiable manifold, though, SO(4) is indeed diffeomorphic to the Cartesian product S³ × P³ (as the quoted statement would imply), where P³ is real projective 3-space, which is diffeomorphic to SO(3). This can be seen because every SO(n) is the total space of an SO(n-1) bundle over the sphere Sⁿ⁻¹. Hence SO(4) is an SO(3) bundle over S³. By the clutching construction, the exact bundle in this case is determined by a map f: S² → SO(3). Since π₂(SO(3)) = 0, this map must be homotopic to a constant map, and so the bundle is equivalent to, and therefore diffeomorphic to, a trivial bundle.)

(This might be called a Standard Mistake, since so many mathematicians, upon first seeing the diffeomorphism statement, erroneously assume the Lie group isomorphism statement.) Daqu (talk) 02:01, 24 July 2014 (UTC)

- I have now fixed this problem. Daqu (talk) 13:34, 24 July 2014 (UTC)

Derivation

Rotation of Axes - Stewart Calculus explains where the matrix coefficients come from. It is not about matrices, but reading Wikipedia I cannot understand where the coefficients come from. They use two angles: that of the rotated system and that of the point in that system. The vector with respect to the rotated system has coordinates

$$X = R\cos\beta, \qquad Y = R\sin\beta.$$

In the standard system, the coordinates are

$$x = R\cos(\alpha+\beta) = R[\cos\alpha\cos\beta - \sin\alpha\sin\beta] = X\cos\alpha - Y\sin\alpha,$$
$$y = R\sin(\alpha+\beta) = R[\sin\alpha\cos\beta + \cos\alpha\sin\beta] = X\sin\alpha + Y\cos\alpha.$$
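This angle-addition derivation can be spot-checked numerically. A minimal Python sketch (the function names are hypothetical; random angles serve only as test data) confirming that R cos(α+β), R sin(α+β) equals the matrix applied to X = R cos β, Y = R sin β:

```python
import math
import random

def via_angle_addition(R, alpha, beta):
    """x = R cos(alpha + beta), y = R sin(alpha + beta): the point at angle beta
    in axes that are themselves rotated by alpha."""
    return (R * math.cos(alpha + beta), R * math.sin(alpha + beta))

def via_matrix(alpha, X, Y):
    """x = X cos(alpha) - Y sin(alpha), y = X sin(alpha) + Y cos(alpha)."""
    c, s = math.cos(alpha), math.sin(alpha)
    return (X * c - Y * s, X * s + Y * c)

random.seed(0)  # reproducible test angles
for _ in range(1000):
    R = random.uniform(0.0, 10.0)
    alpha = random.uniform(-math.pi, math.pi)
    beta = random.uniform(-math.pi, math.pi)
    X, Y = R * math.cos(beta), R * math.sin(beta)  # coordinates in the rotated axes
    expected = via_angle_addition(R, alpha, beta)
    got = via_matrix(alpha, X, Y)
    assert all(abs(a - b) < 1e-9 for a, b in zip(expected, got))
print("angle-addition derivation matches the matrix")
```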

The way it is presented in the article, it seems like nonsense to me. --Javalenok (talk) 17:26, 15 May 2014 (UTC)

- The confusion possibly arises because the Wikipedia article is about rotation of vectors. Your Stewart Calculus is about rotation of co-ordinate axes, so the derivation will be different. It's easy to show that, for the rotation of vectors, the columns of the matrix are simply the images of the base vectors (unit vectors along the co-ordinate axes). Should we have this as a simple derivation? Dbfirs 07:10, 16 May 2014 (UTC)

- I see no difference between rotation of axes and rotation of a vector. It is only a matter of reference: the one located in the rotated coordinates observes the vector rotating. If you can provide a simpler derivation, even better. --Javalenok (talk) 14:39, 16 May 2014 (UTC)

- The difference is that the matrix for one is the transpose of the matrix for the other. Some people prefer the vector convention; others prefer the alternative with rotation of the axes. Dbfirs 16:02, 16 May 2014 (UTC)

- Is it covariant vs. contravariant? I know how active and passive transforms differ. This is only a matter of interpretation. Nowhere have I seen that active can be simpler than passive. --Javalenok (talk) 07:10, 20 May 2014 (UTC)

- The difference is sometimes described as alias versus alibi transformations, as in the section above. Which people consider simpler usually depends on which they met first. Dbfirs 18:08, 20 May 2014 (UTC)

- See my fix in the computation above. The rotation of the axes produces our matrix, whereas rotation of the vector produces a different matrix, as I demonstrate below. Secondly, the derivation in the case of rotating the vectors is absolutely identical to that for rotating the axes. So even your subjective assessment (this is simpler because I was exposed to it earlier) does not make sense, quite apart from whether it makes sense to call something "easier" for such a stupid reason.

If β is again the angle to the vector in the new (X, Y) coordinates, but these axes were rotated by -α with respect to (x, y), then the (x, y) coordinates of the vector will be

$$x = R\cos(\beta-\alpha) = R[\cos\beta\cos\alpha + \sin\beta\sin\alpha] = X\cos\alpha + Y\sin\alpha,$$
$$y = R\sin(\beta-\alpha) = R[\sin\beta\cos\alpha - \sin\alpha\cos\beta] = -X\sin\alpha + Y\cos\alpha.$$
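These formulas say that rotating the axes by -α gives the matrix with the minus sign below the diagonal. A short Python sketch (the names are hypothetical) checks that this matrix equals both R(-α) and the transpose of R(α), where R(α) is the standard anticlockwise matrix:

```python
import math

def rot(a):
    """Standard anticlockwise rotation matrix R(a)."""
    c, s = math.cos(a), math.sin(a)
    return [[c, -s], [s, c]]

def transpose(m):
    return [list(row) for row in zip(*m)]

def apply(m, v):
    return (m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1])

def from_formula(alpha, X, Y):
    """x = X cos(alpha) + Y sin(alpha), y = -X sin(alpha) + Y cos(alpha), as derived above."""
    c, s = math.cos(alpha), math.sin(alpha)
    return (X * c + Y * s, -X * s + Y * c)

def close(u, v, tol=1e-12):
    return all(abs(a - b) < tol for a, b in zip(u, v))

alpha, XY = 0.7, (1.5, -0.4)
print(close(from_formula(alpha, *XY), apply(rot(-alpha), XY)))           # True
print(close(from_formula(alpha, *XY), apply(transpose(rot(alpha)), XY)))  # True
```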

What if β is the angle of the vector in (x, y) and (X, Y) is rotated by α with respect to (x, y)? Then the vector's coordinates in (X, Y) are (X = R cos(β-α), Y = R sin(β-α)), identical to the previous case. If we again specify the vector by the angle β in (x, y) and rotate (X, Y) by α clockwise, then we get the article's matrix again, I suppose, because the vector's angle will be β+α in (X, Y).

So our matrix stems from rotation of the axes, not of vectors. If, however, you rotate vectors in a fixed coordinate system (x, y), say a vector initially at angle β that is then rotated to β+α, its coordinates change from (x = cos β, y = sin β) to (x' = cos(β+α) = x cos α - y sin α, y' = sin(β+α) = x sin α + y cos α); see above. Wait, this is our matrix. OK, you will need to flip the sign when you start from angle β and rotate the vector to β-α. So, you see, it does not matter whether we rotate the axes or the vector: the derivation and the result are the same. No need to flip anything. I wonder what other stupid excuses you can appeal to in order to defend your idée fixe. Wait, I feel that I am starting to understand why you associate the matrix with vector rotation and say it is simpler. I would still like to have a derivation. --Javalenok (talk) 11:50, 18 August 2014 (UTC)

- I'm not sure what you are saying here, but you seem to have made the simple English in the article less coherent. Dbfirs 20:49, 18 August 2014 (UTC)

- What do you mean, less coherent? I have related the statement "and the above matrix also represents a rotation of the axes clockwise through an angle" to your discussion of alibi later in the text. I have related the point positions to their representation as vectors, and vectors to coordinates in other Wikipedia articles. How can increased coherence reduce coherence? Is white = black and vice versa in your Orwellian world? --Javalenok (talk) 10:01, 19 August 2014 (UTC)

Why do people hate a simple derivation (https://en.wikipedia.org/w/index.php?title=Rotation_matrix&diff=621891816&oldid=621795569)? Why the fuck do you hate derivation so much? It seems like you want to keep it secret. Your "two dimensions" section bears no information. When I look at the rotation matrix, I go to Wikipedia to find out how it is derived. Why the fuck did you produce 10 kilotonnes of text, detailing every fine detail related to rotation, all kinds of non-standard axis orientations, but cannot simply permit a simple 2D matrix derivation in the text? What the fuck? --Javalenok (talk) 09:48, 19 August 2014 (UTC)

- I agree, your addition makes the lead far less clear; it's just badly written. As for the derivation, it's unnecessary and unhelpful; thinking of the initial point as being rotated about the origin is only one way of thinking of it and not a standard way. It's also badly formatted, but fixing that would make it take far too much space. The whole section reads far better without it, as it's easier to see how the different matrices relate. Finally, please refrain from using profanities. If you cannot express yourself civilly it is best not to do so at all; instead take a break from editing until you are calmer.--JohnBlackburnewordsdeeds 12:03, 19 August 2014 (UTC)

- It is nice to hear the proposal to be calmer from the menace, and to leave from the oppressor. Feel pain? Shut up, this is what we ultimately want from you. What does "less clear" mean? Can you say more exactly? I have just added a couple of appropriate links in the running text. This can only add to clarity. Derivation unnecessary for whom? For the problem set in your textbook? I have told you that whenever I see the rotation matrix, I want to refresh my memory of where it comes from. How is its availability useless? I can find everything here, from Euler angles to quaternions in non-standard axes, but all I have about the 2D matrix is a vector, originally aligned with the x-axis, rotated by some angle. What is the point of that example? Why not rotate a zero vector? It is even easier: the matrix always maps (0,0) to (0,0)! Euler angles and non-standard axes in quaternion spaces are simple and informative, but the derivation of the basic 2D case, which should be understandable by a simple non-math undergraduate, is "too complex and confusing". In which world do you live? Which criteria do you use to decide what is simple and informative? How is your vector along the x-axis, which bears zero information, more informative? You demonstrate how a vector originally aligned with the x-axis is rotated. Why is an arbitrary point rotation less informative? Are you crazy? The matrix is about arbitrary point rotation, not about points on the x-axis. I do not understand how you defend that point by saying "Thinking of the initial point as being rotated about the origin is only one way of thinking of it and not a standard way."

Saying that "understanding the origins of R precludes drawing parallels between different matrices" is as stupid as saying "understanding the operation of a diesel engine will make it harder for you to see the relationship between different models of combustion engines". Start demonstrating perfect clarity. --Javalenok (talk) 13:55, 19 August 2014 (UTC)

- Keep in mind two things. First, simply repeating that something is unclear does not clarify at all what exactly is unclear, what the problem is. Be specific. Second,

"The most interesting facts are those which can be used several times, those which have a chance of recurring... Which, then, are the facts that have a chance of recurring? In the first place, simple facts."

This is (c) Henri Poincaré, and it says that considering the general case of rotating an arbitrary vector/point is immensely more interesting than your single x-aligned vector rotated, because it covers infinitely more cases (rotated points). The 2D matrix derivation is also much more important than all those quaternions and Euler angles that you treat later, because it is the most basic case, exposed to 1000 times more people (including those who later learn quaternions and Euler angles), and everyone has the question: how did they get that? Are they cheating us? Does it apply to every point in the plane? Other cases are less important because they are rarer and of interest to a handful of experts in the field, and they can be derived once you know how the 2D rotation is derived. --Javalenok (talk) 14:46, 19 August 2014 (UTC)

- Rotation of the base vectors is sufficient to determine the rotation of any other point in the plane. A more complex and badly-expressed derivation is not helpful as a basic introduction. Why do you always get so upset at any criticism? Dbfirs 20:16, 19 August 2014 (UTC)

- (I admit that, if I were editing the Russian Wikipedia, the results would be much less coherent than your edits in English.) Dbfirs 20:20, 19 August 2014 (UTC)

- You rotate only one of the basis vectors. Therefore, the article has no relationship to what you tell me here, let alone to the derivation; the matrix just comes out of nothing, without any reason. So your article does not convey any information, regardless of the language. I can write the same in Russian. It will be similarly non-informative. I will try to remember that "cohesive" means "useless, meaningless and non-informative". --Javalenok (talk) 10:56, 3 September 2014 (UTC)

- I disagree with your assessment of the article, and I've no objection to a simple derivation (no need to make it complicated), but the English must be coherent, not a Google translation (and I live in 2014, not 1984) Dbfirs 12:07, 4 September 2014 (UTC)

- I appended the "this is not a forum" template to the top of this page: I am noting that the conversation is generating more heat than light. I believe it would be best for all involved to desist from direct edits of the specific contested sections for a month or so. The sections are fine as they stand, and gilding the lily is likely to lead to grief. This is standard textbook material, and not a venue for pedagogical innovation. It would be sad if peremptory measures had to be taken instead of thoughtful restraint. In a month's time, let any proposed improvement be explicitly stated in a new section on this talk page, and let consensus vetting of it (or not) proceed. Cuzkatzimhut (talk) 13:53, 4 September 2014 (UTC)

- Which standard textbook tells us that a derivation is "too complex to be included in a Wikipedia page because Wikipedia is not a forum"? Provide a link as proof. I do not believe that any sane textbook can teach such nonsense. --Javalenok (talk) 11:57, 9 September 2014 (UTC)

Seek consensus in October. WP:TE & WP:NOTGETTINGIT. To paraphrase Pudd'nhead Wilson, if I had half of that book, I'd burn it. Ambition may well grow on what it feeds on. Cuzkatzimhut (talk) 13:18, 9 September 2014 (UTC)

Are 3D rotations under heading Basic rotations incorrect?

I couldn't get these transforms to make sense so I referred to another source (http://mathworld.wolfram.com/RotationMatrix.html).

Seems the signs on the 'sine' components are transposed. The Wolfram transformations worked for me.

I am very much a newbie, so I didn't edit the page, but I thought I'd raise a flag.

Dan Dlpalumbo (talk) 14:04, 11 December 2014 (UTC)

- Your linked article explains the difference between rotation of a vector and rotation of axes for 2-D but then goes on to show only rotation of axes for 3-D. The Wikipedia article rotates vectors, not axes, hence the difference. Dbfirs 14:14, 11 December 2014 (UTC)

Suggested merge of section Group theory into other articles.

The section Group theory doesn't belong here. It is too far afield, with its spinors, exponential maps and covering groups, from the relatively simple concept of a rotation matrix. It should be split up, rewritten, and merged into Rotation group SO(3) and Rotation group (aka orthogonal group). I'll go about doing this in a couple of days from now unless protests become too violent. YohanN7 (talk) 16:17, 13 December 2014 (UTC)

- No violence proffered... It looks like a good idea, and Rotation group SO(3) appears like the optimal target, but there should be a very short residual section with basic formulas, like the last one of 9.3, for example, summarizing basic usable results here, and referring copiously to the departed material in the main article, Rotation group SO(3) . Cuzkatzimhut (talk) 17:17, 13 December 2014 (UTC)

- I am in violent agreement. The subsection Baker–Campbell–Hausdorff formula is slightly confused, so the first order of business is to fix that (in place). The last paragraph applies really more to the matrix exponential section than to BCH. YohanN7 (talk) 17:28, 13 December 2014 (UTC)

This page has 100+ watchers and a couple of thousand views per day, so it is best to wait a while with major operations. But, as a piece of motivation for the proposed move/merge: there is no-one reading this article who could possibly be interested in reading the whole article. Those readers struggling with active/passive rotations will not be able to appreciate group theory, while those able to digest the group theory couldn't be bothered with reading the first half of the article. Besides, the article is too big. YohanN7 (talk) 17:46, 13 December 2014 (UTC)

The last paragraph in Baker–Campbell–Hausdorff formula confuses me. What does it refer to? It may refer to the trigonometric formula from the paragraph above. It may not, in which case it is nonsense. There are, AFAIK, no implications between the validity of the BCH formula and simple connectedness. YohanN7 (talk) 18:48, 13 December 2014 (UTC)

- Yes, it refers to Z = αA + βB + γ(A × B) , where I think you mean A--> X, and B-->Y. In this paragraph, the coefficients α, β, γ, are computed with some effort in the ref of Engo. However, all structure constants of this Lie algebra are the same for any representation, thus including the doublet, normally associated with SU(2), but of course, it is the spinor rep of SO(3) as well. So the very same coefficients α, β, γ, follow much more directly, indeed by inspection, in the Pauli matrices article, as linked. (The final parenthetical statement is a pedantic aside... Only the excessively curious or enterprising reader would try to reserve the formula in the quartet rep..., etc.. But a fact is a fact and who knows where it would lead.) For the purposes of this multiplication, the topological differences between SO(3) and SU(2) do not enter... Both represent motions continuously connected to the identity. Cuzkatzimhut (talk) 20:15, 13 December 2014 (UTC)

- OK, I supplanted A--> X, and B-->Y, and removed the universal cover jazz that might confuse the novice. The statement now amounts to X = iθ n̂ . J where now J are either the 3x3 matrices discussed here, or the three σ/2 which obey the same commutation relations, and so have the same commutators in the BCH expansion. But of course, nobody really sums that, in practice: one just expands the exponential to a polynomial in the universal Lie algebra, and multiplies two exponentials as polynomials. Now these expansions are not the same in each representation, as they are neither group nor algebra identities. The point of the final paragraph is that Pauli matrix multiplication is what every undergraduate is taught, so the answer is available by inspection, and one does not really need Rodrigues formula overkill. But the whole paragraph has a footnote flavor to it. Cuzkatzimhut (talk) 20:42, 13 December 2014 (UTC)

- Aha, thanks, now I think I see.

- I am a bit slow on the uptake. Never went to school. YohanN7 (talk) 22:02, 13 December 2014 (UTC)

- Yes, absolutely. The coefficient of the identity is the cosine of the new angle, which amounts to the spherical law of cosines, and the coefficient of the sigmas has to be the new axis times the sine of the same angle. Frankly, we were asked to do this as an exercise at school, but formal books (such as Biedenharn and Louck, etc.) are too snobbish to provide it... the multiplication law of the simplest nonabelian Lie group at that! Cuzkatzimhut (talk) 22:22, 13 December 2014 (UTC)
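
For reference, the multiplication law just described can be written out. This is a reconstruction, not the formula originally posted: it assumes unit vectors û, v̂, parameters A, B, and the standard Pauli identity (û·σ)(v̂·σ) = (û·v̂)I + i(û×v̂)·σ, and it borrows the labels u, v, n, A, B, C proposed later in this discussion:

```latex
e^{iA\,\hat u\cdot\vec\sigma}\,e^{iB\,\hat v\cdot\vec\sigma}
  = \left(I\cos A + i\,\hat u\cdot\vec\sigma\,\sin A\right)\left(I\cos B + i\,\hat v\cdot\vec\sigma\,\sin B\right)
  = I\left(\cos A\cos B - \hat u\cdot\hat v\,\sin A\sin B\right)
    + i\left(\hat u\sin A\cos B + \hat v\sin B\cos A - \hat u\times\hat v\,\sin A\sin B\right)\cdot\vec\sigma
  \equiv I\cos C + i\,(\hat n\cdot\vec\sigma)\sin C = e^{iC\,\hat n\cdot\vec\sigma},
\\[4pt]
\cos C = \cos A\cos B - \hat u\cdot\hat v\,\sin A\sin B,
\qquad
\hat n = \frac{\hat u\sin A\cos B + \hat v\sin B\cos A - \hat u\times\hat v\,\sin A\sin B}{\sin C}.
```

The first relation is the spherical law of cosines; the second is the new axis, read off from the coefficient of the sigmas and divided by the sine of the new angle.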

While we are at it, there is also the article Axis–angle representation which appears to be begging to be incorporated into this future rearrangement. Cuzkatzimhut (talk) 22:50, 13 December 2014 (UTC)

At long last I have begun. Copying and editing into the target first, and only then thinking about deletion here, is probably best. Lie algebra part now in Rotation group SO(3). YohanN7 (talk) 20:29, 29 December 2014 (UTC)

- Just a note in support of what you are doing. As you will know, this was suggested nearly seven years ago. Dbfirs 09:21, 30 December 2014 (UTC)

The end is near...

... of the merge of stuff from group theory into Rotation group SO(3). The original group theory section was 5+ pages, now it is 3- as my screen measures it. My original plan was to "move away" rather than to "trim down". The present version of the section has a lot more the flavor of an overview, with some rudimentary basics, some key results, but little motivation or derivation. I kind of like it this way so I wouldn't want to delete it altogether. While the end is near, it isn't here (there is stuff to do in the SU(2) article teased from the BCH section here, see formulae just above), but it is best I let it rest for a little while to hopefully gather more comments on what remains to be done and what should possibly be undone. YohanN7 (talk) 22:49, 1 January 2015 (UTC)

- Looks great. I took the liberty to update the "Main article" headers, since the interested reader is invited to go there for substance. Possibly what is there can be further condensed by 5% or so... As for comparing the triplet BCH (Engo) to the doublet above and in Pauli matrix, it is just a change of variables, albeit one from hell! If you wish, I could change some names in the Pauli article and above to avoid predictable confusions of notation. Note that, for the normalization of the generators L to agree with the Pauli matrices, you need L ↔ −iσ/2 , so that, e.g., calling n̂=u, we identify ia u.σ = −2a u.L , hence θ= −2a (a Pauli, not Engo!). It would be trivial for me to go to the Pauli article, and supplant n̂ → û , n̂' → v̂ and n̂' ' → n̂, and use different symbols for a,b,c, (but probably not −θ/2, −φ/2 !) to allow for an easy change of variables from Engo to Pauli. But I would only do it if you needed it in explicit connection. My instinct is to leave it as "an exercise for the student", as long as we don't overwhelm them with ambiguous a, b, c's designed to confuse them! Cuzkatzimhut (talk) 01:15, 2 January 2015 (UTC)

- A better idea... if you let me, I'll change the notation and fill in the missing expression right above here, in the previous section, so n --> u, m --> v , N--> n , a-->A, b-->B, A-->C, and provide the answers right there, so you don't have to go back to the Pauli article, which can then be left alone for a while. Cuzkatzimhut (talk) 01:39, 2 January 2015 (UTC)

- Right now my head is spinning (no pun, Sz = 3⁄2), and I need to sleep before I can think clearly (if ever). I suspected that it might not be that easy to come up with the Pauli version. Not only for algebraic manipulations from hell, but also, the Lie algebra map is one-to-one, but at the group level, while (I think so at least, drawing from what I know from the irreducible Lorentz reps that are all faithful, though some are projective) it can be arranged to be one-to-one locally, we do have a projective representation (1:2) of SO(3), and that has worried me a little. Do I make any sense? I don't doubt the expression is true for SU(2), but what bothers me is that I can't see why it must be true. Anything you can fill in here to make life easier is highly appreciated.

- B t w, Engo's paper is available to the public for online reading (see updated ref in Rotation group SO(3)). I think the link is legal. YohanN7 (talk) 02:32, 2 January 2015 (UTC)

- We might leave spin 3/2 aside for a moment. The Pauli version follows by inspection... do you want me to write in the intermediate step above? It is the changes of variables to identify to Engo which are messy. But it does identify, as long as the perturbative series in the exponent of BCH works, but, of course, everything breaks down when the Engo angles get to 2π (inspect his formulas there) where the Pauli ones do not, but pick up a singularity as well. Cuzkatzimhut (talk) 12:21, 2 January 2015 (UTC)

- That would be nice. I don't want to burden you. I haven't made any serious attempts, but I would appreciate it if you filled it out. At any rate, it would speed things up. I'll try to knit things together in the article(s) after I have fully understood. We should perhaps have a version for the t-matrices too (see new addition to rotation group SO(3)), which conform to the math convention used in the article, whereas the Pauli matrices conform to the physics convention. That way the Pauli version could go into that article (wiki-linked of course), beefing it up. YohanN7 (talk) 14:03, 2 January 2015 (UTC)

- OK, I'll do it above, overwriting your own formula, but without inserting the t's. Actually yours are wrong by a - sign! Compare to Pauli matrices, and specifically sigma-2. This is obvious from normalizing the Pauli matrix algebra by dividing by 2i, not multiplying by it. Cuzkatzimhut (talk) 14:16, 2 January 2015 (UTC)

- I put the - sign in the isomorphism (making the t's conform with the Hall ref). I'll change that, it is only a change of basis.

- On a side note:

- is noteworthy in its own right. Not remarkable, but deserving mention. Bracket series certainly do not behave like power series. YohanN7 (talk) 14:23, 2 January 2015 (UTC)

OK, here

Note I am using the hat to denote unit vectors, not Engo's non-standard usage! The rest is obvious, by inspection: one directly solves for C from the coefficient of I,

the spherical law of cosines. Given C, then, from the coefficient of the sigmas,

Consequently, the composite rotation parameters in the exponent of this group element simply amount to

This is straightforward, and bypasses the lengthy huffing and puffing of Engo. It is worth exploring for both sets what happens at the dangerous points 2π, 4π, etc., where the formulas present multivalued options and the full angles versus half-angles make a difference (for example, as A = 2π, solving for C yields B, but alternatively 2π − B). Perturbatively in "powers" of the generators, or the angles, the Z exponent of the respective Pauli vector and Engo spin 1 methods agree. For identification, as I mumbled above, iA u.σ = −2A u.t, hence θ = −2A, φ = −2B, u.v is the axes' cosine, etc. For example, looking at the X component of Z, we get α = (C/sinC) (sinA/A) cosB . To quadratic order in small angles A and B this may well be ≈

- , I think... Engo keeps some full angles and some half angles, as per my comment above that half angles are often expeditious...

Note that for Pauli σs (so normalized from generators),

- Consider now the exact expressions for α/β and α/γ in both cases, easily shown to be identical, respectively, once the "culprits" involving d and C are divided out:

And, yes, equating the α, β, γ closed expression for Z with the BCH series might be worthwhile, as it dramatizes how the same structure constants for commutators lead to the same closed expression for spin 1, spin 1/2, and all spins... the essence of a group identity, versus wildly differing expansions in the universal Lie algebra! People, including myself, have often run back to such (rare) closed expressions for reassurance of what holds and what does not! (Gilmore's books dwell on it more than others'.) Cuzkatzimhut (talk) 14:56, 2 January 2015 (UTC)
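
A numerical sanity check of the closed forms in this thread, written as a hedged sketch in plain Python (no NumPy). It assumes X = iA û·σ and Y = iB v̂·σ, composes exp(X) exp(Y), and compares the result with exp(iC n̂·σ) from the spherical law of cosines. It also checks α = (C/sin C)(sin A/A) cos B together with β = (C/sin C)(sin B/B) cos A and γ = (C/sin C) sin A sin B/(2AB); the β and γ expressions come from the same coefficient matching, using [X, Y] = −2iAB (û×v̂)·σ, rather than being quoted from Engo or the Pauli matrices article. Everything breaks down where sin C = 0:

```python
import math

# Pauli matrices as 2x2 nested lists of complex numbers.
SIGMA = (
    [[0, 1], [1, 0]],
    [[0, -1j], [1j, 0]],
    [[1, 0], [0, -1]],
)

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def dot_sigma(w):
    """The 2x2 matrix w . sigma for a real 3-vector w."""
    return [[sum(w[k] * SIGMA[k][i][j] for k in range(3)) for j in range(2)] for i in range(2)]

def exp_i(angle, unit):
    """exp(i angle unit.sigma) = I cos(angle) + i sin(angle) unit.sigma (unit must be a unit vector)."""
    s = dot_sigma(unit)
    c, sn = math.cos(angle), math.sin(angle)
    return [[(c if i == j else 0.0) + 1j * sn * s[i][j] for j in range(2)] for i in range(2)]

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]

def compose(A, u, B, v):
    """Closed-form C and n-hat with exp(iA u.s) exp(iB v.s) = exp(iC n.s).

    acos picks C in [0, pi]; the division by sin C fails at the dangerous points.
    """
    uv = sum(p * q for p, q in zip(u, v))
    cos_c = math.cos(A) * math.cos(B) - uv * math.sin(A) * math.sin(B)
    C = math.acos(max(-1.0, min(1.0, cos_c)))
    w = cross(u, v)
    n = [(u[k] * math.sin(A) * math.cos(B) + v[k] * math.sin(B) * math.cos(A)
          - w[k] * math.sin(A) * math.sin(B)) / math.sin(C) for k in range(3)]
    return C, n

def bch_coefficients(A, B, C):
    """alpha, beta, gamma with Z = alpha X + beta Y + gamma [X, Y]."""
    k = C / math.sin(C)
    return (k * math.sin(A) / A * math.cos(B),
            k * math.sin(B) / B * math.cos(A),
            k * math.sin(A) * math.sin(B) / (2 * A * B))

A, B = 0.4, 0.9
u, v = [1.0, 0.0, 0.0], [0.0, 0.6, 0.8]
C, n = compose(A, u, B, v)
lhs = matmul(exp_i(A, u), exp_i(B, v))
rhs = exp_i(C, n)
print(max(abs(lhs[i][j] - rhs[i][j]) for i in range(2) for j in range(2)))  # rounding-error size
```

Plain nested lists keep the sketch dependency-free; with NumPy and SciPy one could instead compare against a general matrix exponential.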

- Tnx. I'll probably not be editing more today, real life duties, but will get on with it tomorrow. B t w I notice now that the Pauli article did have a section on this even before. I totally missed that. YohanN7 (talk) 15:13, 2 January 2015 (UTC)

- Do we really need the caveat about structure constants once we agree to stick to either the physics or the math convention (differing by a factor of i in places)? The BCH formula doesn't refer to them, and the quantities on the left are sensitive only to how the inner product is defined. Here there is a built-in trap, because

- (Engo notation) which is half the usual Hilbert-Schmidt inner product.

I only mumbled about structure constants to remind myself how the Pauli matrices, much easier to calculate with (above 3-liner), connect to the standard normalizations, and the X's of the formula... I had to work at being careful yesterday to match them up, especially α/γ where the 2-->1 generators occur, and their normalization matters to produce the same result for Engo and Pauli vectors. That was part of the notational transition hell I was complaining about, X = iA u⋅σ . But anything that works should be fine... Cuzkatzimhut (talk) 11:32, 3 January 2015 (UTC)

The end is here?

I gave it a try in the article with a new hidden box. The only thing I haven't verified is that the constant k in the article actually is equal to 1, but the perturbation expansion above seems to show that. Readers (and I) may wonder if the "t-matrix version" (or the Pauli version) is universal, and if not, why not? The expression is simpler than Engo's. YohanN7 (talk) 00:50, 5 January 2015 (UTC)

The kernel of a Lie algebra homomorphism is an ideal, but since so(3) is simple, it has no nontrivial ideals. The result should hold for all representations. YohanN7 (talk) 01:06, 5 January 2015 (UTC)

- Looks nice, except Y= is missing in the 2nd formula line of the hidebox, and in hindsight, the expressions to give are α/γ and β/γ, instead of α/β. But, to a new reader, the dual usage of X and S , and Y and T, etc... would look weird/overkill... note the σ's are hermitean, the t's antihermitean, (not real antisymmetric!) and the a, b, c used in the second hidebox are NOT the a,b,c of the first hidebox of the section (Engo) above... Should I try to condense the hidebox by yet another round of notational changes, both consistent with Engo, above it, and the unfortunately defined a,b,c, of Pauli vector, which I propose to use primes for? Cuzkatzimhut (talk) 01:19, 5 January 2015 (UTC)

- Yes, please do so. (The reason for the unfortunate naming is that I wanted the capital Greek A, B, Γ for what it is used for now, I forgot about the a,b, c of the first hide-box.) YohanN7 (talk) 01:41, 5 January 2015 (UTC)

- OK, will try that, for a little. How about we continue this commentary on the talk page of that article, then? Cuzkatzimhut (talk) 01:48, 5 January 2015 (UTC)

Quaternions

It would be nice to have a link to quaternions.

There is a formula for quaternion -> rotation matrix. This is said to be "left-handed (Post-Multiplied)". This does not make sense to me. "Post-Multiplied" seems to be very OpenGL/Direct3D (computer graphics) oriented, and should not be mentioned. Also, a "Post-Multiplied" matrix M seems to be one where the (vector) bases A, B, C for the transformation are placed in the rows of the matrix. Hence we must multiply the vector x after the matrix M (xM), which is the opposite of the usual way (Nx, where N is the matrix with the bases in its columns). (M = N^T). Correct me if this is wrong. — Preceding unsigned comment added by 46.15.111.125 (talk) 11:01, 28 February 2014 (UTC)

Me again... I found this link: http://seanmiddleditch.com/journal/2012/08/matrices-handedness-pre-and-post-multiplication-row-vs-column-major-and-notations/, so I was wrong about Post-Multiplication: Post-Multiplication means "matrix on the left of the vector". Hence Post-Multiplication is the usual way (mathematical notation). False statement above struck out.

However, "Post-Multiplied" in the text should be removed. It does not make sense, since we use the standard mathematical notation when we represent a transformation with a matrix. — Preceding unsigned comment added by 46.15.111.125 (talk) 12:24, 28 February 2014 (UTC)

Agreed that "left-handed (Post-Multiplied)" is quite confusing, especially since the formulas agree with the right-handed basic rotation matrices.--olfactoryScientist (talk) 11:21, 7 May 2014 (UTC)

I'm quite confused by this part. There is often some confusion between pre/post-multiplication and left/right-handedness. The formulation "left-handed (Post-Multiplied)" encourages one to think that "Post-Multiplied" is a synonym of "left-handed" just because the matrix is on the left of the multiplied vector. I even suspect the author was making this confusion himself. Can someone clarify this point?

I'm also confused about "left-handed". Does this mean left-handed axes, or left-hand-rule rotations? — Preceding unsigned comment added by 118.9.136.96 (talk) 09:47, 15 May 2016 (UTC)

- The convention used in this article is pre-multiplication (matrix on the left), and right-handed axes. Of course, exactly the same results are obtained if you use the unconventional convention of left-handed axes and post-multiplication. Dbfirs 15:56, 15 May 2016 (UTC)
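
A minimal illustration of the two multiplication orders, assuming right-handed axes and a counterclockwise angle throughout (it says nothing about left-handed axes, which are a separate convention). Multiplying a column vector by R, with the matrix on the left, and multiplying the corresponding row vector by Rᵀ, with the matrix on the right, give the same point; the function names are hypothetical:

```python
import math

def rotation_2d(theta):
    """Counterclockwise rotation for right-handed axes, column-vector convention."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]

def transpose(m):
    return [[m[j][i] for j in range(2)] for i in range(2)]

def matrix_times_column(m, v):
    """Matrix on the left of a column vector: v' = M v (the article's convention)."""
    return [m[i][0] * v[0] + m[i][1] * v[1] for i in range(2)]

def row_times_matrix(v, m):
    """Row vector on the left of the matrix: v' = v M."""
    return [v[0] * m[0][j] + v[1] * m[1][j] for j in range(2)]

R = rotation_2d(math.radians(30))
v = [2.0, 1.0]
print(matrix_times_column(R, v))           # the rotated point
print(row_times_matrix(v, transpose(R)))   # the same point, using R^T with a row vector
```

Using R itself (not its transpose) with a row vector would rotate clockwise instead, which is one common source of the sign confusion discussed on this page.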

slight error at introduction

At the end of the Introduction section, it said

"The set of all orthogonal matrices of size n with determinant +1 forms a group known as the special orthogonal group SO(n). The most important special case is that of the rotation group SO(3). The set of all orthogonal matrices of size n with determinant +1 or -1 forms the (general) orthogonal group O(n)."

The correct phrase should be

"The set of all orthogonal matrices of size n with determinant +1 forms a group known as the special orthogonal group SO(n). The most important special case is that of the rotation group SO(3). The set of all orthogonal matrices of size n forms the (general) orthogonal group O(n)."

Great job. — Preceding unsigned comment added by 177.43.202.74 (talk) 12:46, 10 November 2016 (UTC)
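
The membership test behind the corrected sentence can be sketched directly: a real square matrix is orthogonal when MᵀM = I, and its determinant is then +1 (a rotation, in SO(n)) or −1 (an improper element of O(n), such as a reflection). A 3×3 sketch using only the standard library; the tolerance and labels are illustrative choices:

```python
import math

def transpose3(m):
    return [[m[j][i] for j in range(3)] for i in range(3)]

def matmul3(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def classify(m, tol=1e-9):
    """Return 'SO(3)', 'O(3) but not SO(3)', or 'not orthogonal'."""
    mtm = matmul3(transpose3(m), m)
    orthogonal = all(abs(mtm[i][j] - (1.0 if i == j else 0.0)) < tol
                     for i in range(3) for j in range(3))
    if not orthogonal:
        return "not orthogonal"
    # For an orthogonal matrix the determinant is exactly +1 or -1, so its sign decides.
    return "SO(3)" if det3(m) > 0 else "O(3) but not SO(3)"

c, s = math.cos(0.3), math.sin(0.3)
rotation = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
reflection = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, -1.0]]
stretch = [[2.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
for name, m in [("rotation", rotation), ("reflection", reflection), ("stretch", stretch)]:
    print(name, classify(m))
```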

Edit Request

As of Apr-19-2017 the first paragraph has the following sentence: "To perform the rotation using a rotation matrix R, the position of each point must be represented by a column vector v, containing the coordinates of the point." This is true, but only because R was previously defined as being derived from the defined Θ (positive = counterclockwise from x-axis; x,y orthogonal). Wouldn't it be better to change this sentence to: "To perform the rotation using this rotation matrix R, the position of each point must be represented by a column vector v, containing the coordinates of the point."? And perhaps note that you can do precisely the same rotation with a row vector (x,y) and Rᵀ (as long as we understand that premultiplying R by a row vector produces a row vector, while post-multiplying R by a column vector yields a column vector)? I note this because a lot of students are only familiar with row vectors, and we should accommodate them, imho, as well as those proficient with the more general vector operations.

Also, a more minor point. A couple of sentences later we have this: "... a square matrix R is a rotation matrix if Rᵀ = R⁻¹ and det R = 1." Wouldn't it (I think it would) be better to state "if, and only if" here? It makes it quite unambiguous to those familiar with the phrase, while "if" is not quite as clear. 98.21.212.86 (talk) 21:45, 19 April 2017 (UTC)

- You are correct, of course. I've made the second change, and clarified to avoid confusion in the first. Before we decide how to make any other changes, could I ask if post-multiplication of a row vector is commonly taught in your part of the world? It is quite difficult to include every possible convention without causing confusion. Dbfirs 00:12, 20 April 2017 (UTC)

- The issue of vector-matrix products is very important for linear algebra. Please review Row_and_column_vectors#Preferred_input_vectors_for_matrix_transformations for some references. Practitioners of Galois geometry have also shown this preference.Rgdboer (talk) 20:49, 20 April 2017 (UTC)

- I agree that row vectors are occasionally used, in fact I've used them many years ago. What I should have asked, is "are row vectors and post-multiplication taught in introductory courses anywhere?" Dbfirs 07:33, 21 April 2017 (UTC)

Do only rotations around axes have a sign?

In the top left corner are the rotation matrices, in the bottom right corner are the corresponding permutations of the cube with the origin in its center.

I have added the example on the right to the article. Intuitively I am tempted to call the result a positive 120° rotation around the main diagonal, as it is anti-clockwise seen from the positive side of the main diagonal. Does anyone know if the notion of positive or negative is well defined for rotations that are not around an axis? (I guess this boils down to the question if points on the unit sphere that are not on an axis can reasonably be called positive or negative.) Greetings, Watchduck (quack) 10:26, 19 May 2017 (UTC)
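
Rodrigues' rotation formula gives a concrete check of this example. A sketch, assuming the axis is the unit vector (1, 1, 1)/√3 and that "positive" means counterclockwise when viewed from the tip of that vector looking back toward the origin (the right-hand rule). Under those assumptions the 120° rotation is the cyclic permutation x → y → z. The sign only has meaning relative to the chosen orientation of the axis: the same matrix is a −120° rotation about (−1, −1, −1)/√3. The function name is illustrative:

```python
import math

def axis_angle_matrix(axis, theta):
    """Rodrigues' formula: rotate by theta about the unit vector `axis` through the origin,
    counterclockwise when viewed from the tip of `axis` (right-hand rule)."""
    x, y, z = axis
    c, s = math.cos(theta), math.sin(theta)
    t = 1.0 - c
    return [[c + x * x * t, x * y * t - z * s, x * z * t + y * s],
            [y * x * t + z * s, c + y * y * t, y * z * t - x * s],
            [z * x * t - y * s, z * y * t + x * s, c + z * z * t]]

d = 1 / math.sqrt(3)
R = axis_angle_matrix((d, d, d), 2 * math.pi / 3)
# Rounding (and adding 0.0 to turn any -0.0 into 0.0) shows the permutation matrix
# [[0, 0, 1], [1, 0, 0], [0, 1, 0]], whose columns are e_y, e_z, e_x.
print([[round(e, 9) + 0.0 for e in row] for row in R])
```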

Mistake in rotation matrix in introduction

The introduction says that the 2x2 matrix it presents is for counterclockwise rotation but I believe the matrix presented there is for clockwise rotation given what is stated later in the article. — Preceding unsigned comment added by 94.12.234.26 (talk) 19:15, 21 February 2013 (UTC)

Agreed, I found that all the matrix transformations in this article were for clockwise rotations. I don't trust myself, though, so if this is thirded, please change it. 24.217.199.106 (talk) 20:34, 15 July 2013 (UTC)

Yeah I agree, the matrix given in the introduction is clearly for a clockwise rotation — Preceding unsigned comment added by 82.32.167.51 (talk) 12:00, 1 May 2014 (UTC)

- That was due to an error in the previous edit. I've reverted back to before that edit, as giving the matrix for a counter-clockwise rotation is better: more consistent with the rest of the article and showing the 'normal' way of rotating in a positive sense.--JohnBlackburnewordsdeeds 12:14, 1 May 2014 (UTC)

That mistake is still there.... — Preceding unsigned comment added by 193.145.230.2 (talk) 08:51, 26 October 2016 (UTC)

- No! Rotate (1,0). Cuzkatzimhut (talk) 10:17, 26 October 2016 (UTC)

The matrix presented is for clockwise rotation. A 45 degree rotation for the point (3,3) produces

[0.7071 -0.7071; 0.7071 0.7071]*[3;3] = [0;sqrt(18)] clearly the y' axis is non-zero. Daniel Villa, Professional Engineer — Preceding unsigned comment added by 198.102.155.120 (talk) 16:47, 8 June 2017 (UTC)

- Hope you're not constructing any of my bridges. The image point is on the positive y-axis, so this is clearly a counter-clockwise rotation. There is nothing wrong with the formula.--Bill Cherowitzo (talk) 17:15, 8 June 2017 (UTC)
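
The suggestion to "rotate (1,0)" is easy to check numerically. A sketch applying the introduction's matrix [[cos θ, −sin θ], [sin θ, cos θ]] (the same matrix as [0.7071 -0.7071; 0.7071 0.7071] above) to a column vector; the function name is illustrative. The point (1, 0) lands on the positive y-axis for θ = 90°, and (3, 3) lands on (0, √18) for θ = 45°, both counterclockwise turns:

```python
import math

def rotate_ccw(theta, point):
    """Apply [[cos, -sin], [sin, cos]] to the column vector `point`."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = point
    return (c * x - s * y, s * x + c * y)

print(rotate_ccw(math.pi / 2, (1.0, 0.0)))  # approximately (0, 1): +x is carried to +y
print(rotate_ccw(math.pi / 4, (3.0, 3.0)))  # approximately (0, 4.2426), i.e. (0, sqrt(18))
```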

These signs are not correct, as everyone has already pointed out. I figured the more voices the merrier. This matrix rotates a vector clockwise, if counter-clockwise is defined as positive as is typical. Most everyone seems to think so in the talk section. If you say that two vectors r and r_prime are both vectors describing the same point in two 2D-coordinate systems, one rotated from the other CCW by an angle theta, you can equate them. With the dot product and orthogonality conditions you will see that this is so, because cos(pi/2 - theta) = sin(theta) and cos(pi/2 + theta) = -sin(theta).

I want a bear as a pet (talk) 19:49, 28 October 2017 (UTC)

- The signs were perfectly correct until you changed them. Please stop. Dbfirs 20:00, 28 October 2017 (UTC)

They are just as perfectly correct after I changed them. Also more typical. Counterclockwise rotations are thought of as positive, in accordance with the right-hand rule. Thumb points up. I want a bear as a pet (talk) 20:06, 28 October 2017 (UTC)

- Please read the extensive explanation on this page, and stop changing the signs. You are thinking of rotation of axes. Dbfirs 20:08, 28 October 2017 (UTC)

I concede, and now realize I was wrong. I misunderstood that this article is written in the sense that the coordinate system is held fixed, and jumped to the conclusion that I was right. I see now that I was speaking of rotations of coordinate systems instead. My apologies.

I want a bear as a pet (talk) 20:21, 28 October 2017 (UTC)

- That's OK. Half of us were taught rotation of vectors, and the other half were taught rotation of axes, hence the long disagreements on signs. It is confusing. Dbfirs 20:25, 28 October 2017 (UTC)
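
The two viewpoints can be put side by side. A minimal sketch, with illustrative function names: the passive version projects a fixed point onto the rotated unit vectors e₁ = (cos θ, sin θ) and e₂ = (−sin θ, cos θ), which is the dot-product derivation mentioned above, and it produces the transpose of the active matrix. Equivalently, it is an active rotation by −θ:

```python
import math

def rotate_vector(theta, point):
    """Active: turn the vector counterclockwise by theta; the axes stay fixed."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = point
    return (c * x - s * y, s * x + c * y)

def coords_in_rotated_axes(theta, point):
    """Passive: keep the point fixed and express it in axes rotated counterclockwise by theta."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = point
    return (c * x + s * y, -s * x + c * y)   # (point . e1, point . e2)

theta = math.radians(40)
p = (2.0, 1.0)
print(coords_in_rotated_axes(theta, p))
print(rotate_vector(-theta, p))  # the same numbers, up to rounding
```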

Determining the axis

The more I re-read this section, the more problematic it is. Is it really all that useful to use eigenvectors/values here? Not useful at all for me. Cutting to my major complaint: the statement "Every rotation in three dimensions is defined by its axis, a direction that is left fixed by the rotation, and its angle, the amount of rotation about that axis (Euler rotation theorem)" is very misleading. The problem is that an axis is not a direction. An axis has a direction, but it also has a location; it is NOT a vector. 98.21.66.236 (talk) 21:01, 11 May 2017 (UTC)

- I agree. I'll make an adjustment in the wording. Dbfirs 21:22, 11 May 2017 (UTC)

- Thanks! But my other complaint is that an axis is not a vector. Specifically, Rotation Matrices as defined here do NOT cover the general case where points are rotated around arbitrary lines, but cover only lines which intersect (0,0,0). That is to say that a Rotation Matrix cannot handle the general case where the rotation axis is offset from the origin. (For each line intersecting (0,0,0) there are an infinite number of lines which do not. So, there are lots of cases (!) that can't be dealt with without translations of the axis.) It is what it is, I just think that needs to be made clearer than the text now does. My two cents. 98.21.66.236 (talk) 20:35, 15 May 2017 (UTC)

- The article specifically says: "Since matrix multiplication has no effect on the zero vector (the coordinates of the origin), rotation matrices can only be used to describe rotations about the origin of the coordinate system."

- Could you point out where there is a remaining error confusing an axis with a vector? Dbfirs 21:19, 15 May 2017 (UTC)
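
As the quoted sentence says, a rotation matrix on its own only rotates about axes through the origin. A minimal sketch of the usual workaround, assuming the axis passes through a known point c: translate, rotate, translate back, p′ = R(p − c) + c. The result is an affine map rather than a single 3×3 matrix product (in 4×4 homogeneous coordinates it can be written as one matrix). The function names are illustrative:

```python
import math

def rot_z(theta):
    """Basic counterclockwise rotation about the z-axis through the origin."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def rotate_about_offset_axis(R, center, p):
    """Rotate p by R about the axis parallel to R's axis that passes through `center`."""
    d = [p[i] - center[i] for i in range(3)]                        # move the axis to the origin
    r = [sum(R[i][k] * d[k] for k in range(3)) for i in range(3)]  # ordinary rotation matrix
    return [r[i] + center[i] for i in range(3)]                    # move it back

# A vertical axis through (1, 0, 0): the point (2, 0, 0) swings to about (1, 1, 0).
print(rotate_about_offset_axis(rot_z(math.pi / 2), [1.0, 0.0, 0.0], [2.0, 0.0, 0.0]))
```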

As stated, the magnitude of the given "axis of rotation" is zero when the angle of rotation is 180 degrees. Such a rotation certainly has an axis of rotation and it's certainly not the zero vector. It would be helpful to state a criterion for the validity of the given formula (e.g. -1 not an eigenvalue, matrix not diagonal) and state an alternate formula valid in these cases.

75.50.53.169 (talk) 02:36, 17 March 2018 (UTC)dan
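
One way to handle the 180° case, sketched under the assumption that the input is an exact (or nearly exact) rotation matrix. The antisymmetric part of R gives 2 sin θ times the axis, which vanishes at θ = 180°, while the symmetric part gives (1 − cos θ) k kᵀ, which is best conditioned there. The sketch switches between the two at 90°; the threshold and function name are illustrative choices, not taken from the article. At exactly 180° the sign of the axis is arbitrary, since rotating by 180° about k and about −k is the same rotation:

```python
import math

def axis_angle_from_matrix(R, eps=1e-9):
    """Return (unit_axis, angle) with angle in [0, pi] for a 3x3 rotation matrix.

    Returns (None, 0.0) for the identity, where every axis works.
    """
    cos_t = max(-1.0, min(1.0, (R[0][0] + R[1][1] + R[2][2] - 1.0) / 2.0))
    theta = math.acos(cos_t)
    if theta < eps:
        return None, 0.0
    # Antisymmetric part: w = 2 sin(theta) * axis, which shrinks to zero as theta -> pi.
    w = [R[2][1] - R[1][2], R[0][2] - R[2][0], R[1][0] - R[0][1]]
    if theta < math.pi / 2:
        norm = math.sqrt(sum(c * c for c in w))
        return [c / norm for c in w], theta
    # Symmetric part: (R + R^T)/2 - cos(theta) I = (1 - cos(theta)) k k^T.
    one_minus_c = 1.0 - cos_t
    S = [[(R[i][j] + R[j][i]) / 2.0 - (cos_t if i == j else 0.0) for j in range(3)]
         for i in range(3)]
    i = max(range(3), key=lambda r: S[r][r])   # largest |k_i|, for numerical stability
    k_i = math.sqrt(S[i][i] / one_minus_c)
    k = [S[i][j] / (one_minus_c * k_i) for j in range(3)]
    if sum(a * b for a, b in zip(w, k)) < 0:   # choose the sign consistent with w
        k = [-c for c in k]
    return k, theta

half_turn = [[-1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 0.0, 1.0]]
print(axis_angle_from_matrix(half_turn))   # axis along z, angle pi
```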

Familton

The following was removed:

- The rotation matrix can be derived from the Euler Formula, by considering how a complex number is transformed under multiplication by another complex number of unit modulus. See (Familton, 2015)<ref name=Familton>Familton, Johannes C. (2015). "Quaternions: a history of complex noncommutative rotation groups in theoretical physics". arXiv:1504.04885 [physics.hist-ph], page 50.</ref> for a complete derivation.

The cited reference Quaternions: A History of Complex Non-commutative Rotation Groups in Theoretical Physics by Johannes C. Familton (2015) is not a reliable source:

- No mention of Lectures on Quaternions (1853), only Elements of Quaternions (1866)

- "It appears that Hamilton was never able to find a satisfactory interpretation how quaternions were related to 'vectors'." (page 13)

- Calls the Quaternion Society a "cult".

The assertion about complex numbers and Euler's formula has been filled out now in the article. — Rgdboer (talk) 02:48, 29 March 2018 (UTC)
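
Expanding (x + iy)(cos θ + i sin θ) = (x cos θ − y sin θ) + i(x sin θ + y cos θ) reads off the 2×2 rotation matrix directly. A small check of that equivalence with Python's standard library; the function names are illustrative:

```python
import cmath
import math

def rotate_by_complex(theta, point):
    """Rotate (x, y) by multiplying x + iy by the unit complex number e^(i theta)."""
    z = complex(point[0], point[1]) * cmath.exp(1j * theta)
    return (z.real, z.imag)

def rotate_by_matrix(theta, point):
    """The same rotation written with the matrix [[cos, -sin], [sin, cos]]."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * point[0] - s * point[1], s * point[0] + c * point[1])

theta = math.radians(30)
print(rotate_by_complex(theta, (1.0, 2.0)))
print(rotate_by_matrix(theta, (1.0, 2.0)))  # agrees with the line above up to rounding
```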

Familton's thesis is now cited at History of quaternions. — Rgdboer (talk) 19:12, 30 March 2018 (UTC)

$$\alpha X+\beta Y+\gamma [X,Y]=X+Y+\tfrac{1}{2}[X,Y]+\tfrac{1}{12}[X,[X,Y]]-\tfrac{1}{12}[Y,[X,Y]]+\cdots$$