Block-matching algorithm

A block-matching algorithm is a way of locating matching macroblocks in a sequence of digital video frames for the purposes of motion estimation. The underlying assumption behind motion estimation is that the patterns corresponding to objects and background in one frame of a video sequence move within the frame to form corresponding objects in the subsequent frame. This can be used to discover temporal redundancy in the video sequence, increasing the effectiveness of inter-frame video compression by defining the contents of a macroblock by reference to the contents of a known macroblock that is minimally different.

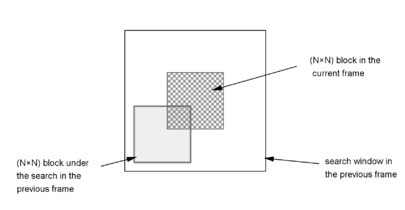

A block matching algorithm involves dividing the current frame of a video into macroblocks and comparing each of the macroblocks with a corresponding block and its adjacent neighbors in a nearby frame of the video (sometimes just the previous one). A vector is created that models the movement of a macroblock from one location to another. This movement, calculated for all the macroblocks comprising a frame, constitutes the motion estimated in a frame.

The search area for a good macroblock match is decided by the 'search parameter', p, where p is the number of pixels on all four sides of the corresponding macroblock in the previous frame. The search parameter is a measure of motion: the larger the value of p, the larger the motion that can be captured and the better the chance of finding a good match. A full search of all potential blocks, however, is computationally expensive. Typical inputs are a macroblock with a side of 16 pixels and a search parameter of p = 7 pixels.
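As a rough illustration of how the search parameter sets the amount of work, the sketch below enumerates the candidate displacements for a given p and discards those that would push the block outside the frame. The function names and the 64 × 64 frame size are assumptions made for this example only.

```python
def candidate_offsets(p):
    """Every displacement (dx, dy) within +/- p pixels of the co-located block."""
    return [(dx, dy) for dy in range(-p, p + 1) for dx in range(-p, p + 1)]


def fits_in_frame(x, y, dx, dy, n, width, height):
    """True if the n x n block at (x + dx, y + dy) lies fully inside the frame."""
    return (0 <= x + dx and x + dx + n <= width
            and 0 <= y + dy and y + dy + n <= height)


offsets = candidate_offsets(7)
print(len(offsets))  # 225, i.e. (2 * 7 + 1) ** 2

# A 16 x 16 block in the top-left corner of a 64 x 64 frame cannot look up or left.
usable = [o for o in offsets if fits_in_frame(0, 0, o[0], o[1], 16, 64, 64)]
print(len(usable))  # 64: only dx and dy in 0..7 remain
```

Blocks near the frame border therefore have fewer valid candidates than the full (2p + 1)² of an interior block.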

Block-matching and 3D filtering makes use of this approach to solve various image restoration inverse problems such as noise reduction[1] and deblurring[2] in both still images and digital video.

Motivation

Motion estimation is the process of determining motion vectors that describe the transformation from one 2D image to another, usually between adjacent frames in a video sequence. The motion vectors may relate to the whole image (global motion estimation) or to specific parts, such as rectangular blocks, arbitrarily shaped patches or even individual pixels. The motion vectors may be represented by a translational model or by many other models that can approximate the motion of a real video camera, such as rotation and translation in all three dimensions and zoom.

Applying the motion vectors to an image to predict the transformation to another image, on account of a moving camera or a moving object in the image, is called motion compensation. The combination of motion estimation and motion compensation is a key part of video compression as used by MPEG-1, MPEG-2 and MPEG-4 as well as many other video codecs.

Video compression based on motion estimation saves bits by sending encoded difference images, which have inherently less entropy than a fully coded frame. However, motion estimation is the most computationally expensive and resource-intensive operation in the entire compression process. Hence, fast and computationally inexpensive motion estimation algorithms are needed for video compression.

Evaluation Metrics

A metric for matching a macroblock with another block is based on a cost function. The most popular cost functions, because they are the least computationally expensive, are:

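Mean difference or Mean Absolute Difference (MAD):

$$\mathrm{MAD} = \frac{1}{N^2} \sum_{i=0}^{N-1} \sum_{j=0}^{N-1} \left| C_{ij} - R_{ij} \right|$$

Mean Squared Error (MSE):

$$\mathrm{MSE} = \frac{1}{N^2} \sum_{i=0}^{N-1} \sum_{j=0}^{N-1} \left( C_{ij} - R_{ij} \right)^2$$

where N is the size of the macroblock, and $C_{ij}$ and $R_{ij}$ are the pixels being compared in the current macroblock and the reference macroblock, respectively.

The motion-compensated image that is created using the motion vectors and macroblocks from the reference frame is characterized by its peak signal-to-noise ratio (PSNR):

$$\mathrm{PSNR} = 10 \log_{10} \frac{(\text{peak-to-peak value of original data})^2}{\mathrm{MSE}}$$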
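The three metrics named above can be sketched in a few lines of plain Python. This is a minimal illustration that assumes blocks are square lists of lists of integer samples; the function names and the `peak=255` default (8-bit samples) are choices made for this example.

```python
import math


def mad(cur, ref):
    """Mean absolute difference between two equally sized square blocks."""
    n = len(cur)
    return sum(abs(cur[i][j] - ref[i][j]) for i in range(n) for j in range(n)) / (n * n)


def mse(cur, ref):
    """Mean squared error between two equally sized square blocks."""
    n = len(cur)
    return sum((cur[i][j] - ref[i][j]) ** 2 for i in range(n) for j in range(n)) / (n * n)


def psnr(original, compensated, peak=255):
    """PSNR in dB; peak=255 assumes 8-bit samples (an assumption of this sketch)."""
    err = mse(original, compensated)
    return math.inf if err == 0 else 10 * math.log10(peak ** 2 / err)


cur = [[10, 20], [30, 40]]
ref = [[12, 18], [30, 44]]
print(mad(cur, ref))  # (2 + 2 + 0 + 4) / 4 = 2.0
print(mse(cur, ref))  # (4 + 4 + 0 + 16) / 4 = 6.0
print(psnr(cur, ref))
```

MAD avoids the multiplication per pixel that MSE needs, which is one reason it is often preferred inside the search loop.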

Algorithms

Block-matching algorithms have been researched since the mid-1980s. Many algorithms have been developed, but only some of the most basic or commonly used are described below.

Exhaustive Search

Exhaustive search (ES) calculates the cost function at each possible location in the search window. This yields the best possible match, within the search window, between the macroblock in the current frame and a block in the reference frame. The resulting motion-compensated image has the highest peak signal-to-noise ratio of any block-matching algorithm. However, it is also the most computationally expensive of them all: the larger the search window, the greater the number of computations.
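A minimal sketch of an exhaustive search is shown below, assuming frames are lists of rows of integer samples and MAD is the cost function. The helper names and the synthetic test frames are illustrative assumptions. The returned vector points from the block's position in the current frame to its best match in the reference frame.

```python
def block(frame, x, y, n):
    """Extract the n x n block whose top-left corner is (x, y)."""
    return [row[x:x + n] for row in frame[y:y + n]]


def mad(a, b):
    """Mean absolute difference between two n x n blocks."""
    n = len(a)
    return sum(abs(a[i][j] - b[i][j]) for i in range(n) for j in range(n)) / (n * n)


def exhaustive_search(cur, ref, x, y, n, p):
    """Return (dx, dy, cost) minimising MAD over every offset within +/- p."""
    height, width = len(ref), len(ref[0])
    target = block(cur, x, y, n)
    best = None
    for dy in range(-p, p + 1):
        for dx in range(-p, p + 1):
            rx, ry = x + dx, y + dy
            if rx < 0 or ry < 0 or rx + n > width or ry + n > height:
                continue  # candidate block would fall outside the frame
            cost = mad(target, block(ref, rx, ry, n))
            if best is None or cost < best[2]:
                best = (dx, dy, cost)
    return best


# Synthetic frames: the current frame is the reference shifted right by 2 and down by 1.
ref = [[(7 * x * x + 13 * y * y + 3 * x * y) % 251 for x in range(32)] for y in range(32)]
cur = [[ref[(y - 1) % 32][(x - 2) % 32] for x in range(32)] for y in range(32)]
print(exhaustive_search(cur, ref, 12, 12, 8, 4))  # (-2, -1, 0.0)
```

Every one of the (2p + 1)² candidates costs a full block comparison, which is the expense the fast algorithms below try to avoid.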

Optimized Hierarchical Block Matching (OHBM)

The optimized hierarchical block matching (OHBM) algorithm speeds up the exhaustive search by using optimized image pyramids.[3]

Three Step Search

Three Step Search (TSS) is one of the earliest fast block-matching algorithms. It runs as follows:

- Start with search location at center

- Set step size S = 4 and search parameter p = 7

- Search 8 locations +/- S pixels around location (0,0) and the location (0,0)

- Pick among the 9 locations searched, the one with minimum cost function

- Set the new search origin to the above picked location

- Set the new step size as S = S/2

- Repeat the search procedure until S = 1

The resulting location for S=1 is the one with minimum cost function and the macro block at this location is the best match.

This algorithm reduces computation by a factor of 9. For p = 7, exhaustive search (ES) evaluates the cost for (2p + 1)² = 225 macroblocks, whereas TSS evaluates it for only 9 + 8 + 8 = 25 macroblocks.
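The procedure above can be sketched as follows. To keep the example short, the cost function is passed in as a callable `cost(dx, dy)` (for instance, the MAD between the current block and the reference block displaced by (dx, dy)); that interface, the function name and the result cache are assumptions of this sketch.

```python
def three_step_search(cost, step=4):
    """Three Step Search over a callable cost(dx, dy).

    Returns ((dx, dy), number_of_points_evaluated).
    """
    center = (0, 0)
    seen = {}  # cache so that each location is costed only once
    while step >= 1:
        # The centre plus 8 neighbours at +/- step pixels.
        pattern = [(center[0] + ox, center[1] + oy)
                   for oy in (-step, 0, step) for ox in (-step, 0, step)]
        for point in pattern:
            if point not in seen:
                seen[point] = cost(*point)
        center = min(pattern, key=seen.get)  # new search origin
        step //= 2
    return center, len(seen)


# Toy bowl-shaped (unimodal) cost with its minimum at (3, -2).
vector, checked = three_step_search(lambda dx, dy: (dx - 3) ** 2 + (dy + 2) ** 2)
print(vector, checked)  # (3, -2) 25
```

The count of 25 arises because the first step costs 9 points and each of the two later steps adds 8 new ones around the already evaluated center.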

Two Dimensional Logarithmic Search

Two Dimensional Logarithmic Search (TDLS) is closely related to TSS; however, it is more accurate at estimating motion vectors for large search windows. The algorithm can be described as follows:

1. Start with the search location at the center
2. Select an initial step size, say S = 8
3. Search 4 locations at a distance of S from the center on the X and Y axes
4. Find the location of the point with the least cost function
5. If a point other than the center is the best matching point, select this point as the new center
6. If the best matching point is at the center, set S = S/2
7. Repeat steps 3 to 6 until S = 1
8. When S = 1, search all 8 locations around the center at a distance of S
9. Set the motion vector as the point with the least cost function
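A possible sketch of these steps is given below. As an assumption of this example, the cost function is supplied as a callable `cost(dx, dy)`, candidates outside ±p are ignored, and ties are resolved in favour of the current center so that the step size is eventually halved.

```python
def two_d_log_search(cost, step=8, p=15):
    """Two Dimensional Logarithmic Search over a callable cost(dx, dy)."""
    seen = {}  # cache of already evaluated locations

    def best_of(points):
        # Keep only points inside the +/- p window, cost them, return the cheapest.
        inside = [q for q in points if max(abs(q[0]), abs(q[1])) <= p]
        for q in inside:
            if q not in seen:
                seen[q] = cost(*q)
        return min(inside, key=seen.get)  # the first listed point wins ties

    center = (0, 0)
    while step > 1:
        cx, cy = center
        best = best_of([center, (cx + step, cy), (cx - step, cy),
                        (cx, cy + step), (cx, cy - step)])
        if best == center:
            step //= 2      # best match at the centre: refine the step
        else:
            center = best   # otherwise move the centre and keep the step
    cx, cy = center
    # Final stage at S = 1: the centre and all 8 surrounding locations.
    return best_of([(cx + ox, cy + oy) for oy in (-1, 0, 1) for ox in (-1, 0, 1)])


print(two_d_log_search(lambda dx, dy: (dx - 5) ** 2 + (dy + 3) ** 2))  # (5, -3)
```

Because the center only moves to a strictly cheaper point, the loop terminates for any cost function defined over the bounded window.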

New Three Step Search

TSS uses a uniformly allocated checking pattern and is prone to missing small motions. New Three Step Search (NTSS) [4] improves on TSS by providing a center-biased search scheme and provisions to stop halfway to reduce the computational cost. It was one of the first widely accepted fast algorithms and was frequently used for implementing earlier standards such as MPEG-1 and H.261.

The algorithm runs as follows:

- Start with search location at center
- Search 8 locations +/- S pixels with S = 4 and 8 locations +/- S pixels with S = 1 around location (0,0)
- Pick among the 17 locations searched (the 16 above plus the origin), the one with minimum cost function
- If the minimum cost function occurs at origin, stop the search and set motion vector to (0,0)
- If the minimum cost function occurs at one of the 8 locations at S = 1, set the new search origin to this location
  - Check adjacent weights for this location; depending on its position, this means checking either 3 or 5 new points
  - The one that gives lowest weight is the closest match, set the motion vector to that location
- If the lowest weight after the first step was one of the 8 locations at S = 4, the normal TSS procedure follows
  - Pick among the 9 locations searched, the one with minimum cost function
  - Set the new search origin to the above picked location
  - Set the new step size as S = S/2
  - Repeat the search procedure until S = 1

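Thus this algorithm checks at least 17 points for each macroblock, and the worst-case scenario involves checking 33 locations. The worst case exceeds the 25 points of TSS; the savings come from the halfway-stop paths, which need only 17, 20 or 22 points when the motion is small.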
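The three branches of NTSS can be sketched as below. The callable `cost(dx, dy)` interface, the function name and the cache used to count distinct points are assumptions of this example, not part of the published algorithm description.

```python
def new_three_step_search(cost):
    """NTSS over a callable cost(dx, dy); returns ((dx, dy), points_checked)."""
    seen = {}  # cache so that each location is costed only once

    def square(center, s):
        # 3 x 3 grid of points spaced s pixels apart, centred on `center`.
        return [(center[0] + ox, center[1] + oy)
                for oy in (-s, 0, s) for ox in (-s, 0, s)]

    def best_of(center, points):
        candidates = [center] + points  # the centre is listed first, so it wins ties
        for q in candidates:
            if q not in seen:
                seen[q] = cost(*q)
        return min(candidates, key=seen.get)

    origin = (0, 0)
    # First step: the origin, 8 points at S = 1 and 8 points at S = 4.
    best = best_of(origin, square(origin, 1) + square(origin, 4))
    if best == origin:
        return best, len(seen)  # halfway stop: zero motion
    if max(abs(best[0]), abs(best[1])) == 1:
        # Minimum at an inner neighbour: check its 3 or 5 unvisited neighbours.
        return best_of(best, square(best, 1)), len(seen)
    # Minimum on the outer ring: continue as in TSS with S = 2, then S = 1.
    for s in (2, 1):
        best = best_of(best, square(best, s))
    return best, len(seen)


print(new_three_step_search(lambda dx, dy: dx * dx + dy * dy))              # ((0, 0), 17)
print(new_three_step_search(lambda dx, dy: (dx - 1) ** 2 + (dy - 1) ** 2))  # ((1, 1), 22)
print(new_three_step_search(lambda dx, dy: (dx - 6) ** 2 + (dy + 6) ** 2))  # ((6, -6), 33)
```

The three calls exercise the stop-at-origin path, the inner-neighbour path and the full TSS continuation respectively.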

Simple and Efficient Search

The idea behind TSS is that the error surface due to motion in every macroblock is unimodal. A unimodal surface is a bowl-shaped surface such that the weights generated by the cost function increase monotonically from the global minimum. However, a unimodal surface cannot have two minima in opposite directions, and hence the 8-point fixed-pattern search of TSS can be further modified to incorporate this and save computations. Simple and Efficient Search (SES) [5] is an extension of TSS that incorporates this assumption.

The SES algorithm improves upon the TSS algorithm by dividing each search step into two phases:

- First phase:
  - Divide the area of search into four quadrants
  - Start the search with three locations: one at the center (A) and two others (B and C), S = 4 locations away from A in orthogonal directions
  - Find the search quadrant for the second phase using the weight distribution for A, B and C:
    - If MAD(A) >= MAD(B) and MAD(A) >= MAD(C), select points in second phase quadrant IV
    - If MAD(A) >= MAD(B) and MAD(A) <= MAD(C), select points in second phase quadrant I
    - If MAD(A) < MAD(B) and MAD(A) < MAD(C), select points in second phase quadrant II
    - If MAD(A) < MAD(B) and MAD(A) >= MAD(C), select points in second phase quadrant III
- Second phase:
  - Find the location with the lowest weight
  - Set the new search origin as the point found above
- Set the new step size as S = S/2
- Repeat the SES search procedure until S = 1
- Select the location with the lowest weight as the motion vector

SES is computationally very efficient compared to TSS. However, the peak signal-to-noise ratio achieved is poorer than that of TSS, as error surfaces are not strictly unimodal in reality.
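The first-phase quadrant rules listed above translate directly into a small decision function. The sketch below covers only that selection step; the function name is hypothetical, the quadrant labels are taken verbatim from the list, and the conditions are tested in the listed order so that the first matching rule wins when MAD(A) equals MAD(C).

```python
def ses_quadrant(mad_a, mad_b, mad_c):
    """Pick the second-phase quadrant from the costs at A (centre), B and C."""
    if mad_a >= mad_b and mad_a >= mad_c:
        return "IV"
    if mad_a >= mad_b and mad_a <= mad_c:
        return "I"
    if mad_a < mad_b and mad_a < mad_c:
        return "II"
    return "III"  # remaining case: mad_a < mad_b and mad_a >= mad_c


print(ses_quadrant(9.0, 4.0, 6.0))  # IV
print(ses_quadrant(5.0, 4.0, 6.0))  # I
print(ses_quadrant(3.0, 4.0, 6.0))  # II
print(ses_quadrant(5.0, 6.0, 4.0))  # III
```

Only one quadrant is then explored in the second phase, which is where the saving over the full 8-point TSS pattern comes from.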

Four Step Search

Four Step Search (FSS) [6] is an improvement over TSS in terms of lower computational cost and better peak signal-to-noise ratio. Similar to NTSS, FSS also employs center-biased searching and has a halfway-stop provision.

The algorithm runs as follows:

1. Start with the search location at the center
2. Set step size S = 2 (irrespective of the search parameter p)
3. Search 8 locations +/- S pixels around location (0,0)
4. Pick among the 9 locations searched, the one with minimum cost function
5. If the minimum weight is found at the center of the search window:
   - Set the new step size as S = S/2 (that is, S = 1)
   - Repeat the search procedure from steps 3 to 4
   - Select the location with the least weight as the motion vector
6. If the minimum weight is found at one of the 8 locations other than the center:
   - Set the new origin to this location
   - Fix the step size as S = 2
   - Repeat the search procedure from steps 3 to 4. Depending on the location of the new origin, search through 5 locations or 3 locations
   - Select the location with the least weight
   - If the least weight location is at the center of the new window go to step 5, else go to step 6
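The steps above can be sketched as follows, with the cost function supplied as a callable `cost(dx, dy)`. The cap of three S = 2 stages before the final S = 1 stage (`max_large_steps=3`) is an assumption of this sketch, reflecting the four steps in the algorithm's name rather than anything stated in the list; the function name and cache are also illustrative.

```python
def four_step_search(cost, max_large_steps=3):
    """Four Step Search over cost(dx, dy); returns ((dx, dy), points_checked)."""
    seen = {}  # cache: overlapping windows only pay for their 3 or 5 new points

    def best_of(center, s):
        # The centre is listed first so that it wins ties.
        candidates = [center] + [(center[0] + ox, center[1] + oy)
                                 for oy in (-s, 0, s) for ox in (-s, 0, s)]
        for q in candidates:
            if q not in seen:
                seen[q] = cost(*q)
        return min(candidates, key=seen.get)

    center = (0, 0)
    for _ in range(max_large_steps):  # assumed cap on the S = 2 stages
        best = best_of(center, 2)
        if best == center:
            break                     # minimum at the centre: go to the final stage
        center = best
    return best_of(center, 1), len(seen)  # final stage with S = 1


print(four_step_search(lambda dx, dy: dx * dx + dy * dy))              # ((0, 0), 17)
print(four_step_search(lambda dx, dy: (dx - 5) ** 2 + (dy + 2) ** 2))  # ((5, -2), 25)
```

In the second call the window first moves to a corner point (5 new locations) and then to an edge point (3 new locations) before the final 8-point refinement.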

Diamond Search

The Diamond Search (DS) [7] algorithm uses a diamond-shaped search point pattern and otherwise runs in the same way as FSS. However, there is no limit on the number of steps that the algorithm can take.

Two different types of fixed patterns are used for the search:

- Large Diamond Search Pattern (LDSP)

- Small Diamond Search Pattern (SDSP)

The algorithm runs as follows:

- LDSP:
  - Start with search location at center
  - Set step size S = 2
  - Search the 8 locations (X,Y) such that |X| + |Y| = S around location (0,0), using a diamond search point pattern
  - Pick among the 9 locations searched, the one with minimum cost function
  - If the minimum weight is found at center for search window, go to SDSP step
  - If the minimum weight is found at one of the 8 locations other than the center, set the new origin to this location
  - Repeat LDSP
- SDSP:
  - Set the new search origin
  - Set the new step size as S = S/2 (that is S = 1)
  - Repeat the search procedure to find location with least weight
  - Select location with the least weight as motion vector

This algorithm tends to locate the global minimum accurately because the search pattern is neither too big nor too small. Diamond Search achieves a peak signal-to-noise ratio close to that of Exhaustive Search with significantly less computational expense.
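A minimal sketch of the LDSP and SDSP stages is given below. The patterns follow the |X| + |Y| = S rule from the list above (8 points for S = 2, 4 points for S = 1); the callable `cost(dx, dy)` interface, the ±p bound and the tie-breaking in favour of the center are assumptions of this example.

```python
# Offsets with |X| + |Y| = 2 (large diamond) and |X| + |Y| = 1 (small diamond).
LDSP = [(0, -2), (1, -1), (2, 0), (1, 1), (0, 2), (-1, 1), (-2, 0), (-1, -1)]
SDSP = [(0, -1), (1, 0), (0, 1), (-1, 0)]


def diamond_search(cost, p=7):
    """Diamond Search over cost(dx, dy); returns ((dx, dy), points_checked)."""
    seen = {}  # cache of already evaluated locations

    def best_of(center, pattern):
        candidates = [center] + [(center[0] + ox, center[1] + oy) for ox, oy in pattern]
        candidates = [q for q in candidates if max(abs(q[0]), abs(q[1])) <= p]
        for q in candidates:
            if q not in seen:
                seen[q] = cost(*q)
        return min(candidates, key=seen.get)  # the centre is first, so it wins ties

    center = (0, 0)
    while True:  # repeat LDSP until the minimum sits at the centre
        best = best_of(center, LDSP)
        if best == center:
            break
        center = best
    return best_of(center, SDSP), len(seen)  # final refinement with SDSP


print(diamond_search(lambda dx, dy: (dx - 3) ** 2 + (dy + 2) ** 2))  # ((3, -2), 19)
```

Since the center only ever moves to a strictly cheaper point inside the bounded window, the unbounded LDSP loop still terminates.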

Adaptive Rood Pattern Search

The adaptive rood pattern search (ARPS) [8] algorithm makes use of the fact that the general motion in a frame is usually coherent, i.e. if the macroblocks around the current macroblock moved in a particular direction, then there is a high probability that the current macroblock will also have a similar motion vector. This algorithm uses the motion vector of the macroblock to its immediate left to predict its own motion vector.

Adaptive rood pattern search runs as follows:

- Start with search location at the center (origin)

- Find the predicted motion vector for the block

- Set step size S = max (|X|,|Y|), where (X,Y) is the coordinate of predicted motion vector

- Search for rood pattern distributed points around the origin at step size S

- Set the point with least weight as origin

- Search using small diamond search pattern (SDSP) around the new origin

- Repeat SDSP search until least weighted point is at the center of SDSP

Rood pattern search places the search directly in an area where there is a high probability of finding a good matching block. The main advantage of ARPS over DS is that if the predicted motion vector is (0, 0), it does not spend computation on LDSP but starts directly with SDSP. Furthermore, if the predicted motion vector is far away from the center, ARPS again saves computation by jumping directly to that vicinity and using SDSP, whereas DS must first work its way there with repeated LDSP steps.
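The procedure can be sketched as below. The callable `cost(dx, dy)`, the `predicted` argument (standing in for the left neighbour's motion vector) and the ±p bound are assumptions of this example. Checking the predicted vector itself alongside the four rood arms is also an assumption of the sketch, since the list above does not enumerate the rood points.

```python
def arps(cost, predicted=(0, 0), p=7):
    """Adaptive Rood Pattern Search; returns ((dx, dy), points_checked)."""
    seen = {}  # cache of already evaluated locations

    def best_of(center, points):
        candidates = [q for q in [center] + points
                      if max(abs(q[0]), abs(q[1])) <= p]
        for q in candidates:
            if q not in seen:
                seen[q] = cost(*q)
        return min(candidates, key=seen.get)  # the centre is first, so it wins ties

    step = max(abs(predicted[0]), abs(predicted[1]))
    center = (0, 0)
    if step > 0:
        # Rood arms at distance `step`, plus the predicted vector (assumed).
        rood = [(step, 0), (-step, 0), (0, step), (0, -step), predicted]
        center = best_of(center, rood)
    while True:  # repeat SDSP until the best point is the centre
        cx, cy = center
        best = best_of(center, [(cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)])
        if best == center:
            return center, len(seen)
        center = best


bowl = lambda dx, dy: (dx - 5) ** 2 + (dy + 4) ** 2
print(arps(bowl, predicted=(4, -3)))            # ((5, -4), 15)
print(arps(lambda dx, dy: dx * dx + dy * dy))   # ((0, 0), 5)
```

With a zero prediction the rood stage is skipped entirely and a stationary block is resolved with only 5 evaluations.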

References

1. Dabov, Kostadin; Foi, Alessandro; Katkovnik, Vladimir; Egiazarian, Karen (16 July 2007). "Image denoising by sparse 3D transform-domain collaborative filtering". IEEE Transactions on Image Processing. 16 (8): 2080–2095. Bibcode:2007ITIP...16.2080D. CiteSeerX 10.1.1.219.5398. doi:10.1109/TIP.2007.901238. PMID 17688213. S2CID 1475121.

2. Danielyan, Aram; Katkovnik, Vladimir; Egiazarian, Karen (30 June 2011). "BM3D Frames and Variational Image Deblurring". IEEE Transactions on Image Processing. 21 (4): 1715–1728. arXiv:1106.6180. Bibcode:2012ITIP...21.1715D. doi:10.1109/TIP.2011.2176954. PMID 22128008. S2CID 11204616.

3. Je, Changsoo; Park, Hyung-Min (2013). "Optimized hierarchical block matching for fast and accurate image registration". Signal Processing: Image Communication. 28 (7): 779–791. doi:10.1016/j.image.2013.04.002.

4. Li, Renxiang; Zeng, Bing; Liou, Ming (August 1994). "A New Three-Step Search Algorithm for Block Motion Estimation". IEEE Transactions on Circuits and Systems for Video Technology. 4 (4): 438–442. doi:10.1109/76.313138.

5. Lu, Jianhua; Liou, Ming (April 1997). "A Simple and Efficient Search Algorithm for Block-Matching Motion Estimation". IEEE Transactions on Circuits and Systems for Video Technology. 7 (2): 429–433. doi:10.1109/76.564122.

6. Po, Lai-Man; Ma, Wing-Chung (June 1996). "A Novel Four-Step Search Algorithm for Fast Block Motion Estimation". IEEE Transactions on Circuits and Systems for Video Technology. 6 (3): 313–317. doi:10.1109/76.499840.

7. Zhu, Shan; Ma, Kai-Kuang (February 2000). "A New Diamond Search Algorithm for Fast Block-Matching Motion Estimation". IEEE Transactions on Image Processing. 9 (2): 287–290. Bibcode:2000ITIP....9..287Z. doi:10.1109/83.821744. PMID 18255398.

8. Nie, Yao; Ma, Kai-Kuang (December 2002). "Adaptive Rood Pattern Search for Fast Block-Matching Motion Estimation" (PDF). IEEE Transactions on Image Processing. 11 (12): 1442–1448. Bibcode:2002ITIP...11.1442N. doi:10.1109/TIP.2002.806251. PMID 18249712.